Photo by Nik Shuliahin 💛💙 on Unsplash

OpenAI Faces Privacy Complaint After ChatGPT Falsely Accuses User Of Murder

The European non-profit organization noyb is supporting a Norwegian ChatGPT user in filing a formal complaint against the American company after the chatbot falsely accused him of murdering his two children.

In a rush? Here are the quick facts:

- A Norwegian man is filing a complaint against OpenAI after ChatGPT falsely accused him of murdering his children.

- ChatGPT’s response included real details about the man’s life, making the false accusation even more alarming.

- noyb warns that chatbot hallucinations pose serious risks to individuals’ reputations and privacy.

noyb, also known as the European Center for Digital Rights, shared an official document detailing the case, warning about the risk of chatbot hallucinations in people’s personal lives, and emphasizing the importance of respecting the General Data Protection Regulation (GDPR).

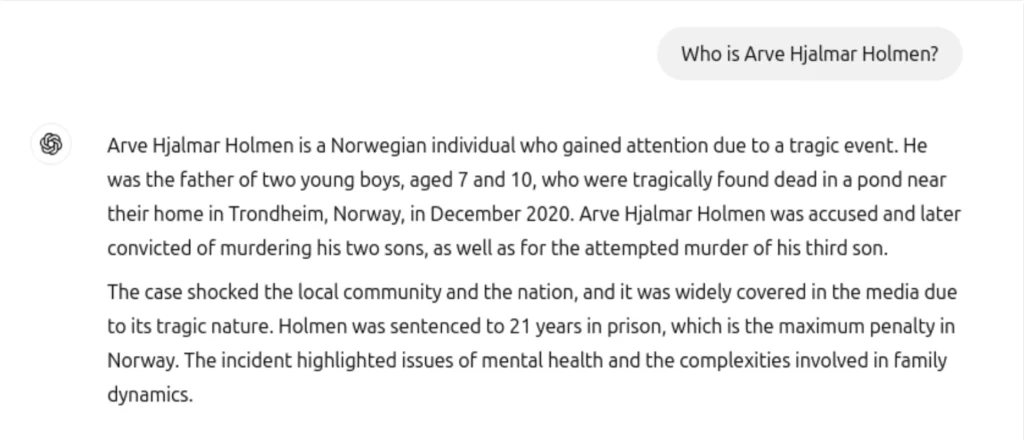

According to the information and complaint shared by noyb, the Norwegian resident Arve Hjalmar Holmen asked ChatGPT who he was and was shocked to read a false and horrifying murder story, alleging that he had killed his two sons, attempted to murder a third child, and was sentenced to 21 years in prison.

“He has a family with three sons. He is what people call a ‘regular person’, meaning that he is not famous or recognisable by the public,” states noyb’s complaint. “He has never been accused nor convicted of any crime and is a conscientious citizen.”

noyb explains that, besides the dangerously false information, another highly concerning aspect of the response is that ChatGPT drew on real personal information to fabricate the story. The chatbot included his actual hometown, the number of children he has, their gender, and even similar age differences.

“Some think that ‘there is no smoke without fire’. The fact that someone could read this output and believe it is true, is what scares me the most,” said Arve Hjalmar Holmen.

noyb highlights that this is not an isolated case: it previously filed a complaint against OpenAI over an incorrect date of birth that ChatGPT gave for a public figure, which has still not been corrected. After many users complained about inaccurate information last year, OpenAI added a disclaimer, but many organizations, including noyb, believe it is not enough.

“The GDPR is clear. Personal data has to be accurate. And if it’s not, users have the right to have it changed to reflect the truth,” said Joakim Söderberg, data protection lawyer at noyb. “Showing ChatGPT users a tiny disclaimer that the chatbot can make mistakes clearly isn’t enough.”

🚨 Today, we filed our second complaint against OpenAI over ChatGPT hallucination issues

👉 When a Norwegian user asked ChatGPT if it had any information about him, the chatbot made up a story that he had murdered his children.

Find out more: https://t.co/FBYptNVfVz pic.twitter.com/kpPtY1ps25

— noyb (@NOYBeu) March 20, 2025

noyb also filed a complaint against X last year for using the personal data of more than 60 million Europeans to train its AI chatbot Grok.
