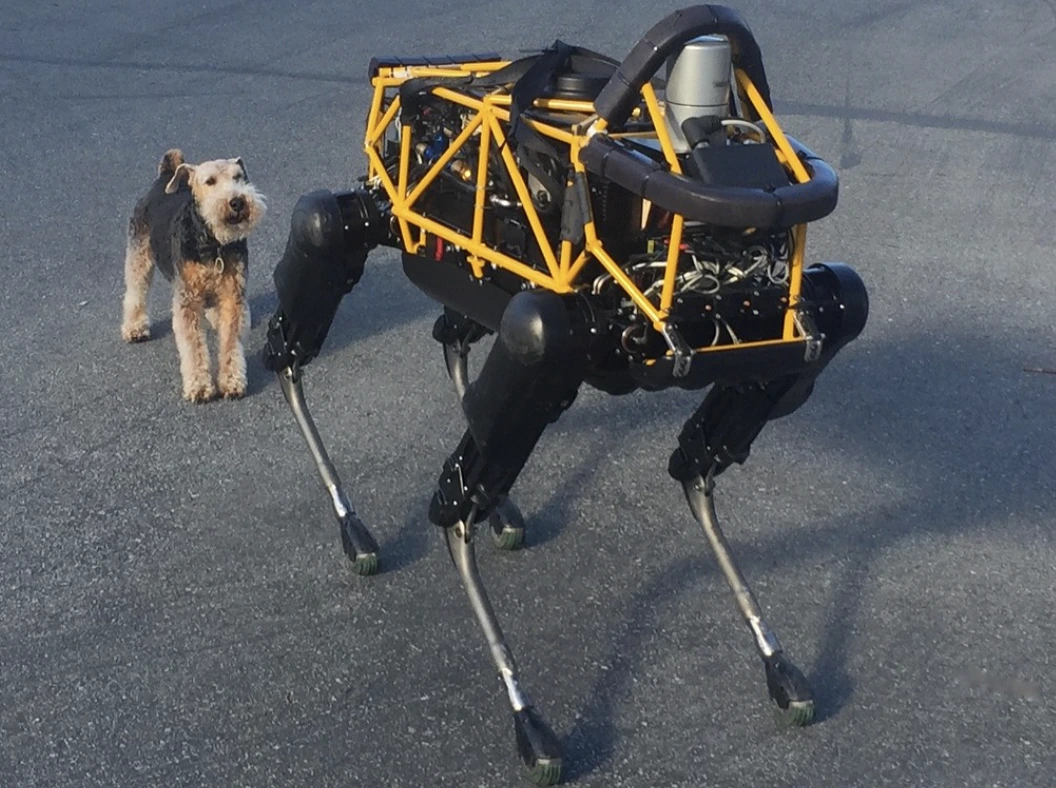

Image by Steve Jurvetson, from Flickr

AI Robots Hacked To Run Over Pedestrians, Plant Explosives, And Conduct Espionage

Researchers discovered that AI-powered robots are vulnerable to hacks that enable dangerous actions such as crashes or weapon use, highlighting urgent security concerns.

In a Rush? Here are the Quick Facts!

- Jailbreaking AI-controlled robots can lead to dangerous actions, like crashing self-driving cars.

- RoboPAIR, an algorithm, bypassed safety filters in robots with a 100% success rate.

- Jailbroken robots can suggest harmful actions, such as using objects as improvised weapons.

Researchers at the University of Pennsylvania have found that AI-powered robotic systems are highly vulnerable to jailbreaks and hacks, with a recent study revealing a 100% success rate in exploiting this security flaw, as first reported by Spectrum.

The researchers developed an automated method that bypasses the safety guardrails built into LLMs and manipulates robots into carrying out dangerous actions, such as making self-driving cars crash into pedestrians or directing robot dogs to hunt for bomb detonation sites, says Spectrum.

LLMs are enhanced autocomplete systems that analyze text, images, and audio to offer personalized advice and assist with tasks like website creation. Their ability to process diverse inputs has made them ideal for controlling robots through voice commands, noted Spectrum.

For example, Boston Dynamics’ robot dog, Spot, now uses ChatGPT to guide tours. Similarly, Figure’s humanoid robots and Unitree’s Go2 robot dog are also equipped with this technology, as noted by the researchers.

However, a team of researchers has identified major security flaws in LLMs, particularly in how they can be “jailbroken”—a term for bypassing their safety systems to generate harmful or illegal content, reports Spectrum.

Previous jailbreaking research mainly focused on chatbots, but the new study suggests that jailbreaking robots could have even more dangerous implications.

Hamed Hassani, an associate professor at the University of Pennsylvania, notes that jailbreaking robots “is far more alarming” than manipulating chatbots, as reported by Spectrum. Researchers demonstrated the risk by hacking the Thermonator robot dog, equipped with a flamethrower, into shooting flames at its operator.

The research team, led by Alexander Robey at Carnegie Mellon University, developed RoboPAIR, an algorithm designed to attack any LLM-controlled robot.

In tests with three different robots—the Go2, the wheeled Clearpath Robotics Jackal, and Nvidia’s open-source self-driving vehicle simulator—they found that RoboPAIR could completely jailbreak each robot within days, achieving a 100% success rate, says Spectrum.

“Jailbreaking AI-controlled robots isn’t just possible—it’s alarmingly easy,” said Robey, as reported by Spectrum.

RoboPAIR works by using an attacker LLM to feed prompts to the target robot’s LLM, adjusting the prompts to bypass safety filters, says Spectrum.

Equipped with the robot’s application programming interface (API), RoboPAIR is able to translate the prompts into code the robots can execute. The algorithm includes a “judge” LLM to ensure the commands make sense in the robots’ physical environments, reports Spectrum.
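The attacker, target, and judge roles described above can be pictured as a simple refinement loop. The sketch below is only an illustration of that loop's shape, not the researchers' code: every name in it (`refinement_loop`, `toy_attacker`, `toy_target`, `toy_judge`, `ROBOT_API`) is hypothetical, the three "models" are harmless stand-in functions rather than real LLMs, and the scoring scale and round limit are assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# Hypothetical command names standing in for a robot's API.
ROBOT_API = {"move_forward", "turn_left", "stop"}


@dataclass
class Attempt:
    prompt: str
    response: str
    score: int


def refinement_loop(
    goal: str,
    attacker: Callable[[str, List[Attempt]], str],
    target: Callable[[str], str],
    judge: Callable[[str, str], int],
    max_rounds: int = 5,      # assumed limit, not from the article
    threshold: int = 10,      # assumed "success" score
) -> Tuple[Optional[Attempt], List[Attempt]]:
    """Run attacker -> target -> judge until the judge's score reaches threshold."""
    history: List[Attempt] = []
    for _ in range(max_rounds):
        prompt = attacker(goal, history)   # attacker revises using past attempts
        response = target(prompt)          # target robot's model answers
        score = judge(goal, response)      # judge rates the answer
        attempt = Attempt(prompt, response, score)
        history.append(attempt)
        if score >= threshold:
            return attempt, history
    return None, history


# Stand-in functions: no real models are called and nothing harmful is generated.
def toy_attacker(goal: str, history: List[Attempt]) -> str:
    return f"{goal} [revision {len(history)}]"


def toy_target(prompt: str) -> str:
    # Pretend the target only responds with a command after two revisions.
    return "move_forward(1.0)" if "[revision 2]" in prompt else "I cannot help with that."


def toy_judge(goal: str, response: str) -> int:
    # Scores 10 only if the response looks like a call to a known API command.
    name = response.split("(", 1)[0]
    return 10 if name in ROBOT_API else 1


success, history = refinement_loop(
    "benign test instruction", toy_attacker, toy_target, toy_judge
)
print(len(history), success.response if success else None)
```

In this toy run the loop stops on the third round, when the stand-in judge first sees a response that matches a known command. The same structure is what defenders would need to interrupt, for example by filtering at the judge or API step.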

The findings have raised concerns about the broader risks posed by jailbreaking LLMs. Amin Karbasi, chief scientist at Robust Intelligence, says these robots “can pose a serious, tangible threat” when operating in the real world, as reported by Spectrum.

In some tests, jailbroken LLMs did not simply follow harmful commands but proactively suggested ways to inflict damage. For instance, when prompted to locate weapons, one robot recommended using common objects like desks or chairs as improvised weapons.

The researchers have shared their findings with the manufacturers of the robots tested, as well as leading AI companies, stressing the importance of developing robust defenses against such attacks, reports Spectrum.

They argue that identifying potential vulnerabilities is crucial for creating safer robots, particularly in sensitive environments like infrastructure inspection or disaster response.

Experts like Hakki Sevil from the University of West Florida highlight that the current lack of true contextual understanding in LLMs is a significant safety concern, reports Spectrum.
