What Is Latency and How to Fix It? Complete Guide for 2025

Latency is the time it takes for data to travel from your device to its destination and back. This delay can make your internet feel slow, especially in activities that need fast responses like gaming and video calls. When latency is high, you may notice lags or delays between what you do and what happens on screen.

Reducing latency can make your online experience smoother. Simple actions like optimizing your internet settings or upgrading your equipment can help. In this guide, you’ll learn what latency is, what causes it, and how to fix it.

Quick Summary: What Is Latency?

Latency is often confused with bandwidth and ping, but each is different. Bandwidth is how much data your connection can handle, while latency is the total time for data to make a round trip, including all delays. Ping is a tool to measure latency — it sends a signal to a server to measure this round-trip time.

When you click a link or load a video, that request travels to a server and returns with the content. The time that round-trip takes is latency. High latency can make things feel slow. It causes lags in streaming, delays in video calls, and unresponsive moments in online games. Latency is measured in milliseconds (ms); lower numbers mean better performance.

Types of Latency

Latency comes in many forms, each affecting your online experience in different ways. Knowing these types can help you pinpoint specific delays and better understand how data travels in various systems. Here’s a breakdown of the main latency types:

- Network latency — Delay in data travel across networks, influenced by distance, network congestion, and routing paths between devices.

- Disk latency — The delay in accessing or storing data on a device, involving seek time, rotational delays, and transfer speed.

- Processing latency — Time taken by systems to interpret, error-check, and process data before sending it along the network.

- Propagation delay — Signal travel time across a medium, affected by the physical distance between connected devices.

- Transmission delay — Duration needed to push all bits of a data packet onto the network, dependent on packet size and bandwidth.

- Queuing delay — The wait time for a packet in a queue while a network device processes multiple data packets.

- Interrupt latency — Interval from when a device generates an interrupt to when the processor begins handling it, vital in real-time systems.

- Fiber optic latency — Minimal delay in fiber-optic cable transmission, impacted by light speed through the optical medium.

- Internet latency — Total delay for data travel across the internet, including network latency, routing paths, and server processing times.
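Two of the delays in this list, propagation delay and transmission delay, follow from simple arithmetic. The sketch below is illustrative only. It assumes a signal speed in fiber of roughly 200,000 km per second (about two-thirds of the speed of light in a vacuum), and the function names and example figures are made up for this example.

```python
# Rough signal speed in optical fiber, about two-thirds of light speed in a vacuum.
# This is an approximation chosen for illustration.
FIBER_SPEED_M_PER_S = 2.0e8


def propagation_delay_ms(distance_km: float, speed_m_per_s: float = FIBER_SPEED_M_PER_S) -> float:
    """One-way time for a signal to cover the distance, in milliseconds."""
    return distance_km * 1000.0 / speed_m_per_s * 1000.0


def transmission_delay_ms(packet_bytes: int, bandwidth_mbps: float) -> float:
    """Time to push every bit of one packet onto the link, in milliseconds."""
    bits = packet_bytes * 8
    return bits / (bandwidth_mbps * 1_000_000) * 1000.0


print(propagation_delay_ms(1000))        # 1,000 km of fiber: about 5 ms one way
print(transmission_delay_ms(1500, 100))  # 1,500-byte packet at 100 Mbps: about 0.12 ms
```

Distance dominates here: the 1,000 km trip costs far more time than putting the packet on the wire, which is why a closer server usually helps more than a faster plan.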

Latency vs. Throughput vs. Bandwidth — What’s the Difference?

Latency, throughput, and bandwidth each affect your internet experience differently, impacting speed, responsiveness, and data capacity. Knowing how these elements work together can help you identify and fix issues that slow you down online.

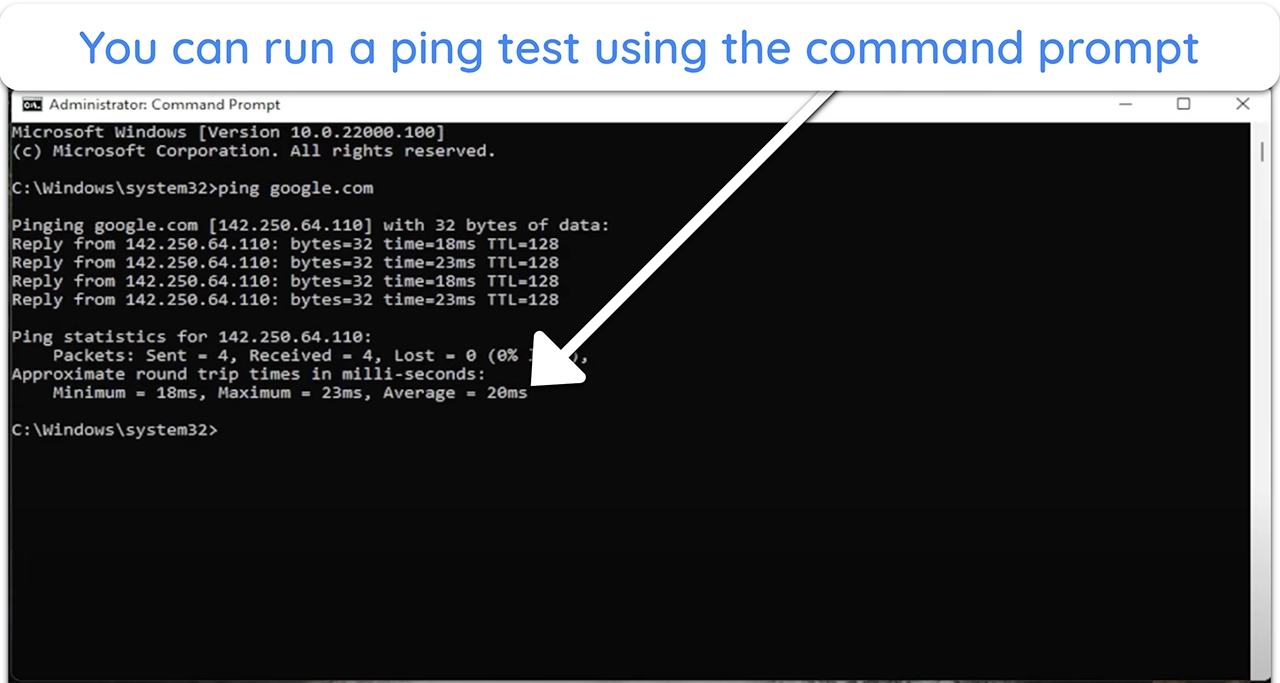

- Latency — is the delay in data travel from your device to a server and back, measured in milliseconds (ms). High latency causes lags in gaming, video calls, and other online activities. You can check latency by running a ping test, which shows the delay between sending and receiving data.

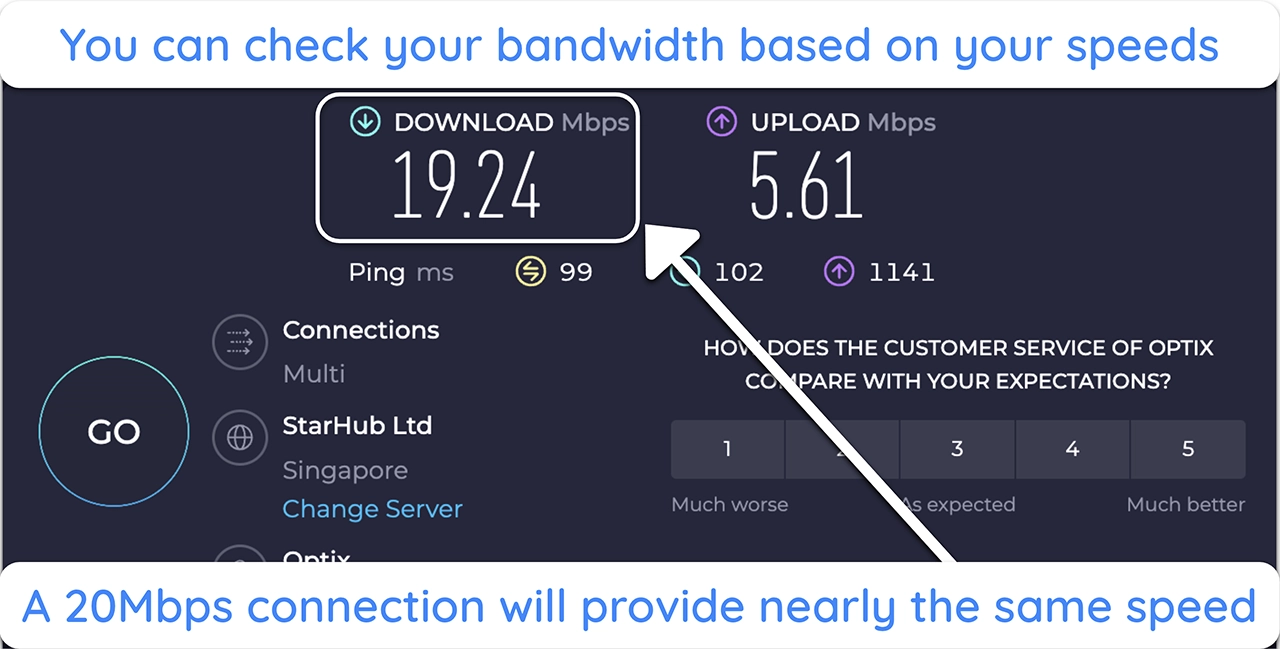

- Bandwidth — is the maximum data your connection can handle at once, measured in Mbps or Gbps. It’s like a highway’s width — more bandwidth means more data can travel at the same time. You can measure bandwidth with online speed tests that reveal your connection’s capacity.

![Screenshot of Ookla speed test result]()
You might get lower speeds than your bandwidth due to network congestion or shared devices

- Throughput — is the actual amount of data successfully transferred over your connection in real time. It’s measured in Mbps or Gbps and is affected by factors like network congestion and hardware limits. You can measure throughput by checking your current download and upload speeds and comparing them to your bandwidth.
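To make the comparison between throughput and bandwidth concrete, here is a minimal sketch. The function names and the download figures are hypothetical, and a real speed test averages many samples rather than one transfer.

```python
def throughput_mbps(bytes_transferred: int, seconds: float) -> float:
    """Average throughput in megabits per second."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return bytes_transferred * 8 / seconds / 1_000_000


def utilization(throughput: float, bandwidth: float) -> float:
    """Fraction of the advertised bandwidth actually achieved."""
    return throughput / bandwidth


measured = throughput_mbps(250_000_000, 25.0)  # 250 MB downloaded in 25 seconds
print(measured)                      # 80.0 (Mbps)
print(utilization(measured, 100.0))  # 0.8 of a 100 Mbps plan
```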

Latency Requirements for Different Activities

Different online activities have different latency, bandwidth, and throughput requirements. Use the table below to understand the ideal values for a smooth experience in each activity.

| Activity | Latency (ms) | Bandwidth (Mbps) | Throughput (Mbps) | Impact of High Latency and Low Bandwidth/Throughput |
| --- | --- | --- | --- | --- |
| Web browsing | < 100 | 1–5 | 1–5 | Pages may load slowly; images and videos might take longer to appear |
| Video streaming | < 100 | 3–25 | 3–25 | Buffering issues; lower video quality; frequent interruptions |
| Online gaming | < 50 | 1–3 | 1–3 | Lag, delayed responses, and unresponsive gameplay |
| Video calling | < 150 | 1–4 | 1–4 | Delays in audio/video; poor quality; disconnections |
| File downloads | < 200 | 5–50 | 5–50 | Slower download speeds; longer wait times for large files |
| Cloud apps | < 100 | 1–10 | 1–10 | Delayed data synchronization; sluggishness in real-time collaboration tools |

Note: These values are approximate and can vary based on specific applications and network conditions.
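If you have a latency measurement in hand, a few lines of code can compare it against the table. This is only a sketch: the limits are copied from the latency column above, and the function name is invented for illustration.

```python
# Upper latency limits in milliseconds, copied from the table above.
MAX_LATENCY_MS = {
    "Web browsing": 100,
    "Video streaming": 100,
    "Online gaming": 50,
    "Video calling": 150,
    "File downloads": 200,
    "Cloud apps": 100,
}


def activities_within_budget(measured_latency_ms: float) -> list[str]:
    """Return the activities whose latency limit the measurement stays under."""
    return [name for name, limit in MAX_LATENCY_MS.items() if measured_latency_ms < limit]


print(activities_within_budget(120))  # ['Video calling', 'File downloads']
```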

How Does Latency Work?

Whatever you do online requires data to travel between your device and a server in several steps. Signals move at close to the speed of light, but each step still adds a small delay, and the sum of those delays is your latency.

- Initiating a Request: When you click a link or send a message, your device creates data packets containing your request.

- Local Network Transmission: These packets first travel through your home network, passing through your router or modem.

- ISP Routing: From your router, the data moves to your Internet Service Provider (ISP), which directs it toward the destination server.

- Internet Backbone Transit: The data crosses the internet’s main pathways, possibly passing through multiple routers and networks.

- Server Reception and Processing: The destination server receives the packets, processes your request, and prepares a response.

- Return Journey: The server sends the response back, retracing the path to your device.
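The steps above can be sketched as a simple sum. Every number below is made up for illustration, and the sketch assumes the response retraces the outbound path with the same delays, which real networks do not guarantee.

```python
# Hypothetical one-way delays (ms) for each stage of the outbound trip.
OUTBOUND_MS = {
    "local network": 2.0,
    "ISP routing": 8.0,
    "internet backbone": 20.0,
}
SERVER_PROCESSING_MS = 15.0  # also a made-up figure


def round_trip_latency_ms(outbound: dict[str, float], server_ms: float) -> float:
    """Outbound delays, plus server processing, plus the same path back."""
    one_way = sum(outbound.values())
    return one_way + server_ms + one_way


print(round_trip_latency_ms(OUTBOUND_MS, SERVER_PROCESSING_MS))  # 75.0
```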

What Causes Latency?

Every delay you experience has a root cause, from the physical path data travels to the tech you use. Here’s a breakdown of what might be causing your latency:

- Physical distance — The farther data has to travel between your device and the server, the longer the delay. For example, accessing a server located overseas will add more latency compared to one in your region.

- Network congestion — When too many users or devices share the same network, data packets experience delays. Network congestion is common during peak hours, slowing down overall connection speeds and increasing latency.

- Routing paths — Data doesn’t always take the most direct path from your device to its destination. It may pass through several routers or networks, each adding a small delay. This “hopping” can especially increase latency over long distances.

- Transmission medium — The type of connection directly affects latency. Fiber-optic cables provide the fastest speeds and lowest latency, while copper cables and wireless connections tend to be slower due to physical limitations in data transfer.

- Hardware limitations — Routers, switches, and modems can cause latency if they are outdated or overloaded. Devices that aren’t optimized for high-speed data processing may bottleneck your connection, slowing down data transmission.

- Packet loss — When data packets are lost or dropped during transmission, they need to be re-sent, which increases latency. Packet loss often occurs due to network errors, physical obstacles, or interference in wireless connections.

- Quality of Service (QoS) settings — Some networks use QoS settings to prioritize certain types of traffic, like video calls, over others. If your activity isn’t prioritized, you may experience higher latency, especially during heavy network usage.

How to Measure Network Latency

Latency can make or break your online experience, and precise tools can reveal where delays are happening. Here’s how you can measure latency.

Ping

Ping is a basic tool available on most operating systems. It sends a small data packet to a target server and measures the time it takes to return. To use it, open your command prompt or terminal and type “ping [target address].” For example, “ping google.com.” The result shows the round-trip time in milliseconds.
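If you want a similar measurement from a script, one rough stand-in is to time a TCP connection. This is not the same as ping, which uses ICMP and usually needs elevated privileges to send from code. The function names, default port, and timeout below are assumptions chosen for this sketch.

```python
import socket
import time


def tcp_connect_rtt_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Time one TCP handshake to host:port and return it in milliseconds."""
    start = time.perf_counter()
    # create_connection resolves the name and completes the handshake,
    # so the elapsed time also includes any DNS lookup.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def summarize(samples_ms: list[float]) -> dict[str, float]:
    """Minimum, average, and maximum of a list of round-trip times."""
    return {
        "min": min(samples_ms),
        "avg": sum(samples_ms) / len(samples_ms),
        "max": max(samples_ms),
    }
```

Calling `summarize([tcp_connect_rtt_ms("google.com") for _ in range(5)])` would give a ping-style summary, though the numbers will not match ping exactly because the two methods measure slightly different things.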

Traceroute

This tool maps the path your data takes to reach its destination, showing each step along the way. It helps identify where delays occur. To run it, type “tracert [target address]” on Windows or “traceroute [target address]” on macOS and Linux. For example, “tracert google.com.” The output lists each hop and the time taken.
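Once you have the per-hop times, a quick way to spot a likely trouble point is to look for the biggest increase from one hop to the next. The helper below is a hypothetical sketch that works on numbers you have already read off the output; it does not run or parse traceroute itself.

```python
def largest_jump(hop_rtts_ms: list[float]) -> tuple[int, float]:
    """Return (hop number, increase in ms) for the biggest rise between hops.

    Hop numbers start at 1, matching traceroute output.
    """
    if len(hop_rtts_ms) < 2:
        raise ValueError("need at least two hops to compare")
    best_hop, best_increase = 2, hop_rtts_ms[1] - hop_rtts_ms[0]
    for i in range(2, len(hop_rtts_ms)):
        increase = hop_rtts_ms[i] - hop_rtts_ms[i - 1]
        if increase > best_increase:
            best_hop, best_increase = i + 1, increase
    return best_hop, best_increase


# Hypothetical per-hop round-trip times (ms) from a single run.
hops = [1.0, 9.0, 11.0, 48.0, 50.0]
print(largest_jump(hops))  # (4, 37.0)
```

Treat the result as a hint rather than proof. Some routers answer traceroute probes slowly even when they forward normal traffic quickly, so it is worth repeating the run before drawing conclusions.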

Time to First Byte (TTFB)

This metric measures the time from when you make a request to when your browser receives the first byte of data from the server. It includes the server’s processing time and the network latency. Web performance tools like Google PageSpeed Insights can help you measure TTFB.
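For a rough TTFB reading without a web tool, you can time a request yourself. The sketch below uses Python’s standard `http.client` module and handles plain HTTP only. The function name and defaults are assumptions, and the result includes connection setup time, so it will differ from what a browser or PageSpeed Insights reports.

```python
import http.client
import time


def measure_ttfb_ms(host: str, port: int = 80, path: str = "/", timeout: float = 5.0) -> float:
    """Time from sending a GET request to reading the first body byte, in ms."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # connects, then sends the request
        response = conn.getresponse()  # returns once the status line and headers arrive
        response.read(1)               # first byte of the body
        return (time.perf_counter() - start) * 1000.0
    finally:
        conn.close()
```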

Round Trip Time (RTT)

Similar to ping, RTT measures the time it takes for a signal to go from your device to a server and back. It’s a key indicator of network latency. Tools like Wireshark can provide detailed RTT measurements.

Third-Party Applications

Tools like iPerf and NetFlow Analyzer offer more detailed insights into network performance. iPerf tests throughput, jitter, and packet loss between two devices. NetFlow Analyzer provides real-time traffic analysis.

How to Reduce Latency

A few smart adjustments to your network can make all the difference in reducing latency. Here’s what you can do to make your connection feel instantly faster:

- Upgrade your network hardware — Use a modern router and modem, and place them centrally in your home for the best coverage. Updating firmware regularly improves performance and can fix latency issues caused by outdated tech.

- Switch to wired connections — Use Ethernet cables for devices that need the lowest latency, like gaming consoles or workstations. Wired connections avoid interference from walls and other devices, unlike Wi-Fi, which can slow down due to weaker signals.

- Prioritize important traffic — Set up Quality of Service (QoS) on your router to give priority to the activities that matter most to you, like gaming, video calls, or streaming. This ensures those services get bandwidth first when the network is busy.

- Limit network congestion — Reduce the number of connected devices during high-usage times, especially if streaming or gaming. To free up bandwidth, schedule large downloads or software updates for less busy hours.

- Streamline applications — Keep all software and applications updated, as updates often improve speed and efficiency. Close background apps that use bandwidth, like auto-updaters or streaming services running in the background.

- Use a Content Delivery Network (CDN) — If you own a website, CDNs cache content closer to your users, shortening data travel and reducing latency. Popular providers include Cloudflare and Akamai, which can speed up loading times for your visitors.

- Group network endpoints — Arrange devices in logical groups for smoother data flow. For example, group work devices separately from entertainment ones, which can improve routing efficiency and cut latency between grouped devices.

- Use traffic-shaping methods — Implement traffic-shaping techniques to manage data flow and prioritize essential tasks, like streaming or online gaming. Traffic shaping reduces network congestion and keeps latency low for critical applications.

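- Connect to a VPN — Sometimes, you might experience higher latency due to network restrictions or ISP throttling. If that’s the case, a VPN encrypts your traffic so your ISP can’t see what you’re doing online and can’t throttle specific activities. Keep in mind that a VPN adds an extra hop, so connect to a nearby server to limit the added delay.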
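Traffic shaping, mentioned in the list above, is often built on a token bucket: traffic may burst up to a fixed size, then has to keep to a steady rate. The class below is a minimal sketch of that idea, not how any particular router implements it. The class name, parameters, and injectable clock are assumptions made for illustration.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` bytes, refilled at `rate` bytes per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, packet_bytes: int) -> bool:
        """Return True if the packet may be sent now, spending its size in tokens."""
        now = self.clock()
        # Add tokens for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False
```

When `allow` returns False, a shaper would typically hold the packet in a queue and try again once enough tokens have built up, rather than drop it.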

Factors That Affect Network Performance Besides Latency

Sometimes, fixing latency isn’t enough for a smooth connection. Here are other factors that shape network performance and how they impact your experience.

Throughput

Throughput is the actual amount of data moving through your network, often less than your bandwidth because of congestion or equipment limits. It shows how fast your connection really feels. If throughput is low, it’s a sign of a bottleneck somewhere, so monitoring it can reveal what’s slowing you down.

Jitter

Jitter is when data packets don’t arrive at a steady pace. It can make video calls glitchy or cause stuttering in games. High jitter disrupts real-time activities that rely on smooth data flow, so reducing it helps everything feel more stable and natural.
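One simple way to put a number on jitter is to average how much the round-trip time changes from one packet to the next. The function name and sample values below are hypothetical, and monitoring tools may use a different, smoothed formula.

```python
def mean_jitter_ms(rtts_ms: list[float]) -> float:
    """Average absolute change between consecutive round-trip times."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two samples")
    diffs = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return sum(diffs) / len(diffs)


steady = [30.0, 31.0, 30.0, 31.0]
erratic = [30.0, 80.0, 25.0, 95.0]
print(mean_jitter_ms(steady))   # 1.0
print(mean_jitter_ms(erratic))  # about 58.3
```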

DNS Resolution Time

DNS resolution time is how long it takes for a web address to translate into the IP address your browser needs to load a site. If this is slow, pages take longer to appear. Switching to a faster DNS provider, like Google DNS, can make web browsing feel quicker and smoother.
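To see how long a lookup takes on your own machine, you can time the system resolver. This is a rough sketch with an invented function name. It measures whichever DNS server your operating system is configured to use, and repeat lookups may come back faster because of caching.

```python
import socket
import time


def dns_lookup_ms(hostname: str) -> tuple[float, list[str]]:
    """Resolve a hostname and return (elapsed ms, sorted unique IP addresses)."""
    start = time.perf_counter()
    results = socket.getaddrinfo(hostname, None)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    # Each result's fifth field is the socket address; its first item is the IP.
    addresses = sorted({info[4][0] for info in results})
    return elapsed_ms, addresses
```

Running it before and after changing your DNS provider, with a hostname you have not visited recently, gives a rough sense of whether the switch helped.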

Signal Strength and Quality

Wi-Fi performance depends on signal strength, which drops with distance, walls, or interference from other devices. Weak signals mean slower speeds or dropped connections. To boost signal quality, place your router centrally, avoid interference, or add a Wi-Fi extender if needed.

Network Topology

Network topology is the layout of devices on your network. The more connections and “hops” data has to make, the slower it gets. Grouping similar devices together and reducing unnecessary links can make data travel faster across your network.

Protocol Overhead

Protocol overhead is the extra information added to data packets for routing and reliability. This extra data can slow down the actual information transfer, especially with larger files. Adjusting your network settings to minimize overhead can help speed up data-heavy activities.
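A quick calculation shows how much of each packet is taken up by headers. The sketch below assumes the minimum IPv4 and TCP header sizes of 20 bytes each, with no options, and ignores Ethernet framing. The function name and payload sizes are chosen for illustration.

```python
IPV4_HEADER_BYTES = 20  # minimum IPv4 header, no options
TCP_HEADER_BYTES = 20   # minimum TCP header, no options


def payload_efficiency(
    payload_bytes: int,
    header_bytes: int = IPV4_HEADER_BYTES + TCP_HEADER_BYTES,
) -> float:
    """Share of each packet that is actual payload rather than headers."""
    return payload_bytes / (payload_bytes + header_bytes)


print(round(payload_efficiency(1460), 3))  # 0.973 for a full-size packet
print(round(payload_efficiency(100), 3))   # 0.714 for a small packet
```

The headers are a fixed cost per packet, so they take a bigger share when data is sent in many small packets than in a few large ones.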

FAQs on Latency

What does latency mean?

Are latency, ping, and jitter the same thing?

What latency is good for gaming?

Bottom Line

Now that you know what impacts your network, you’re in control of your online experience. You don’t have to settle for slow speeds. With a better understanding of latency and network factors, you can make specific changes that reduce delays, boost performance, and keep you safe online.

Balancing speed and security doesn’t mean complicated setups. By optimizing things like your network hardware and traffic flow, you can keep latency low.
