30+ Stats on Online Racism: Prevalence, Impact, and Solutions

Racism is a daily reality for many, manifesting both as subtle biases and as outright prejudice. With an increasing shift toward remote work, online courses, video games, and social media, our everyday interactions are moving more into the digital world. This raises an important question: How does racism present itself online?

This article takes a deep dive into what online racism looks like, how pervasive it is, the link between real-life racism and online racist incidents, and the far-reaching impact of online racism. Some of the online racism seen today is a product of policy choices by social media companies, corporations, and broadband providers. Coupled with laws that are slow to adapt to the online world, racism, for the most part, has been allowed to fester in the digital world.

Understanding Racism and Online Racism

Before jumping into the stats, I’ll define what constitutes online racism and establish the current digital landscape. With digital redlining practices and the unintended consequences of algorithmic bias, you’ll find the online world is already tipped against racial and ethnic minorities.

Glossary of Terms

| Term | Definition |
| --- | --- |
| Race | The categorization of people into distinct social constructs based on their physical traits. It is also loosely applied to geographic, cultural, religious, or national groups. |
| Ethnicity | The categorization of people into distinct social constructs based on a shared culture, history, and ancestries. |
| Racism | A type of prejudice characterized by negative emotional reactions toward specific racial or ethnic groups, beliefs in racial stereotypes, and racial discrimination toward others. |
| White person | A person from or a descendant of the natives of Europe, the Middle East, or North Africa. Their skin is usually light-toned. |
| Black person | A person from or a descendant of any Black racial group in Africa. |
| African American | A person in the US from or a descendant of any Black racial groups in Africa. |
| Asian | A person from or a descendant of the natives of the Far East, Southeast Asia, or the Indian subcontinent. |
| Native Hawaiian or Other Pacific Islander | A person from or a descendant of the natives of Hawaii, Guam, Samoa, or other Pacific Islands. |
| Hispanic | A person from a country where the official or national language is Spanish. |
| Latino, Latina, & Latinx | A person from Latin America or the Caribbean, or a descendant of a person from these places. Latino denotes a male, Latina denotes a female, and Latinx is gender neutral. |
| People of color | A term mainly used in the US and Canada to denote a race other than White. |

Definition, Types, and Levels of Racism

Racism can be direct (overt) or indirect (covert). Direct racism is blatant prejudice or a criminal offense based on evidence and history. Examples of direct racism include hate crimes, physical aggression, bullying, and racial profiling. Indirect racism is subtle and sometimes unspoken. It includes implicit bias or discrimination, racial stereotyping, and microaggressions. Online racism is racism played out in the digital world.

There are four levels of racism:

- Internalized racism: This is an internal belief system that is biased or prejudiced against another race or other races.

- Interpersonal racism: This is when an individual expresses their racist beliefs toward another individual or group.

- Institutionalized racism: This occurs when an institution or organization practices discriminatory or biased policies that are racist.

- Structural or systemic racism: This occurs when society as a whole, including institutions and organizations, implements discriminatory legislation and policies that are racist.

Online racism occurs due to the same reasons racism occurs in the offline world – ignorance, a belief in race superiority, and the fear that a minority group will change the status quo or compete for resources.

However, research shows that online anonymity encourages people to behave differently and sometimes even more aggressively toward others. The lack of social cues online also leads people to rely on racial stereotypes when interacting or making decisions. Moreover, the internet lets like-minded people seek each other out, so those who are prone to racist ideologies can band together while remaining anonymous.

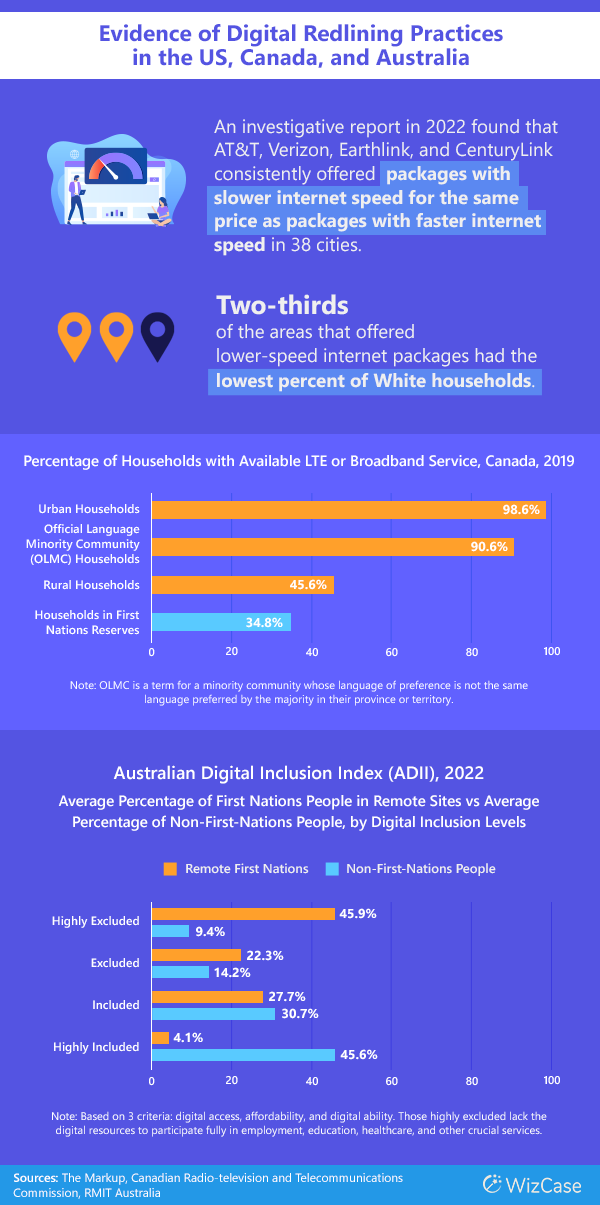

#1: Digital Redlining, a Form of Systemic Racism, Is Evident in Several Countries

Digital redlining is the deliberate lack of investment in broadband access or affordable broadband services for low-income areas. Often, most residents of these areas are people of color. Historically, the term redlining was coined in the 1960s to describe a practice in which banks denied home loans to those living in specific neighborhoods that were predominantly Black.

However, more than income disparity influences digital redlining practices. One study found that broadband access in urban areas with a Black and Hispanic majority was still 10%-15% lower than in urban areas with an Asian or White majority after controlling for median household income across 905 large US cities.

Digital inequities lead to further inequality in healthcare, education, and employment. For example, during the pandemic, 1 out of 3 Black, Latino, American Indian, and Native Alaskan kids didn’t have home broadband, which is necessary for a smooth online learning experience.

Furthermore, those who have a fast and reliable broadband connection will benefit from online health services. A study found that African Americans, Asians, American Indians, Native Alaskans, and Pacific Islanders were less likely to use telemedicine than White people. Lack of broadband access also blocks opportunities such as virtual job interviews and work-from-home employment.

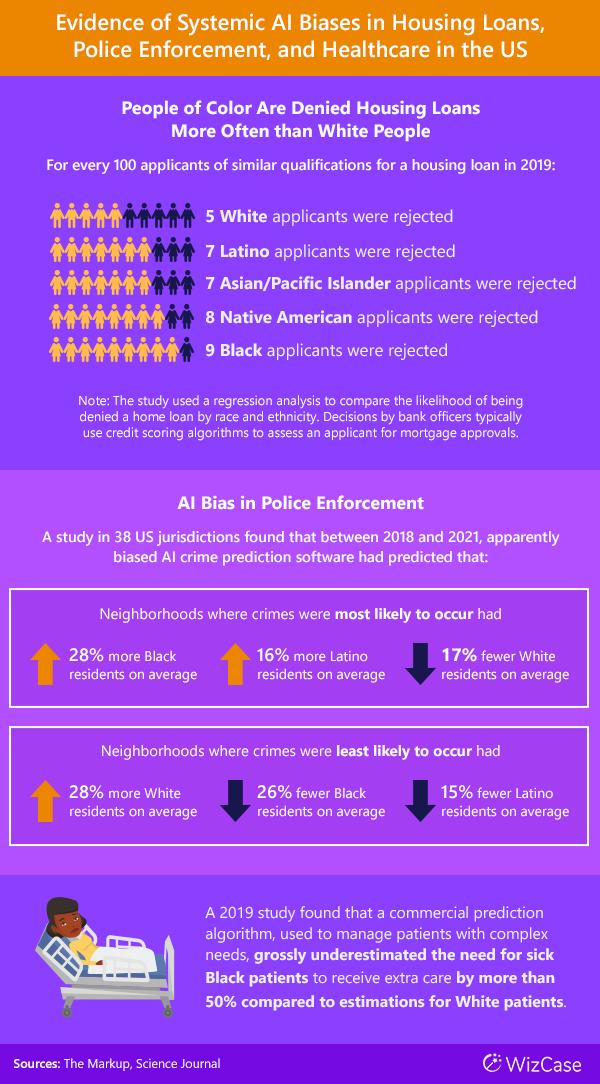

#2: Systemic Bias Is Embedded in Many AI Algorithms

According to IBM, AI bias or algorithmic bias is when human prejudices influence the training data or an algorithm’s design, leading to biased or unfair outputs. These systemic biases have been found in many AI applications across industries such as law enforcement, employment, education, and healthcare, where biased results could lead to life-or-death situations.

Human bias is introduced in several stages of AI development:

- During the data-gathering phase, when the input data used to train AI models is collected.

- During programming, when lines of code are written.

- During training, input data can be miscategorized, and results can be incorrectly assessed when providing feedback for the machine to learn.

AI results reflect social norms, including prejudices and bias. A report by the National Institute of Standards and Technology suggested that eliminating AI bias goes beyond fixing code. It requires looking at the biases of the people who are driving AI development. To illustrate, Meta came under scrutiny in 2024 because its council of advisors for AI was composed only of White men.

This isn’t a minor issue, and many tech leaders have expressed their concerns. In 2022, a survey found that the majority (54%) were extremely worried about AI bias, an increase of 12 percentage points from 2019. Fully 81% wanted more AI regulation.

A Snapshot of Trends for Online Hate and Harassment Against Racial Minority Groups

In Western countries, racial and ethnic minorities are often subject to more online abuse compared to the White majority. This section looks at trends in online hate speech, harassment, severe harassment, and hate crimes against people of color.

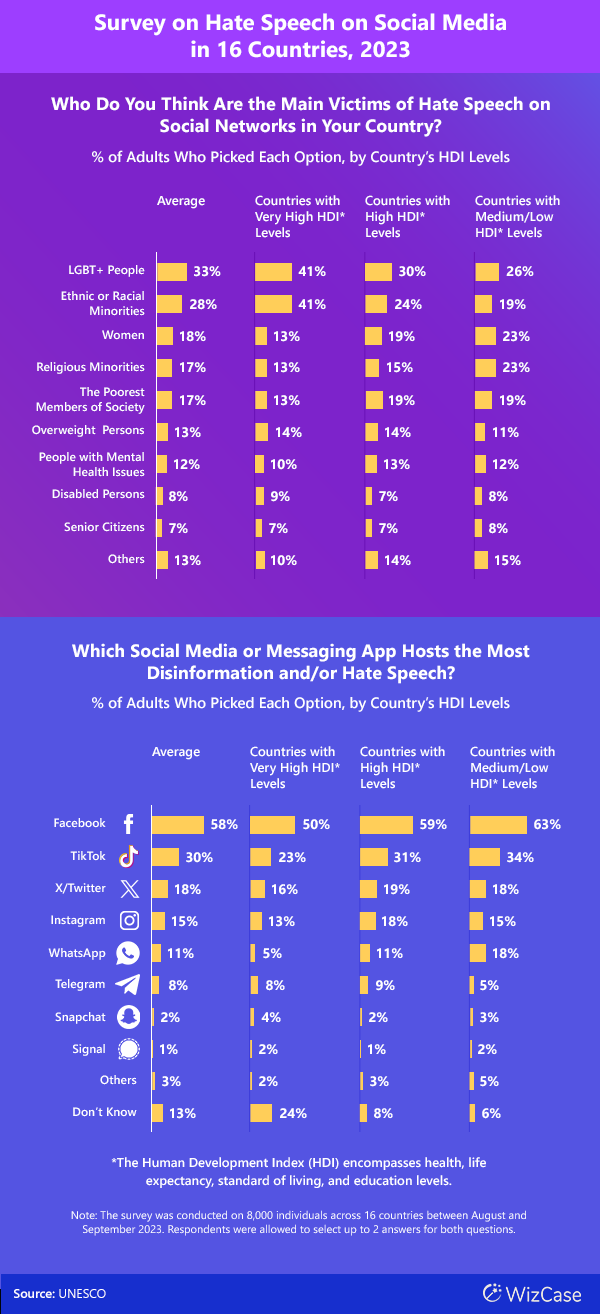

#3: Racial Minorities Are Some of the Biggest Targets of Hate Speech on Social Media

In a UNESCO survey, citizens from countries with very high Human Development Index (HDI) scores felt that racial and ethnic minorities were the most prominent targets of hate speech online. However, in countries with lower HDI scores, fewer citizens felt racial minorities were victims of hate speech. One possible explanation is that developed countries in the survey, including the US, Austria, and Belgium, have a higher proportion of White population than less developed countries.

The majority of respondents felt Facebook was the most significant online source of hate speech among social media platforms. This isn’t surprising, given that Facebook is the biggest social media platform, with 2.11 billion daily active users globally at the end of 2023.

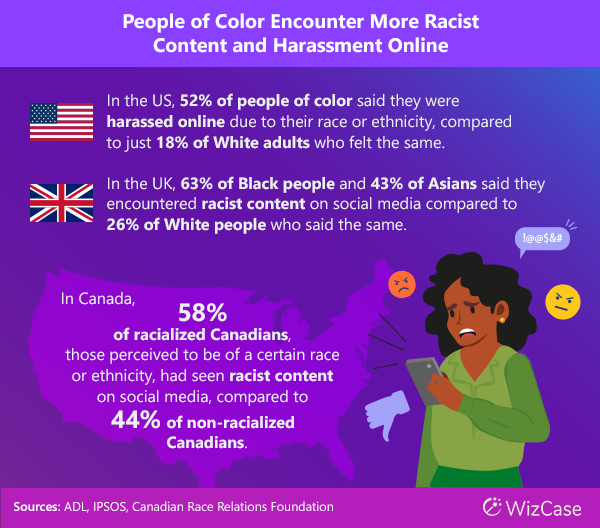

#4: Racial Minorities Are More Likely to Encounter Online Hate Due to Their Race than White People in the US, UK, and Canada

In the US, UK, and Canada, minorities felt they had experienced more online hate and harassment due to their race or ethnicity compared to White people. In the US, around half of non-whites thought they were targeted due to their race, which is more than double the percentage of White people who felt the same way in 2023.

Surveys in the UK and Canada also showed similar trends. Relative to people who identified as White, a higher percentage of marginalized racial and ethnic groups, or those who are perceived to be from those groups, said they received hate online due to their race or ethnicity.

#5: Online Hate Crime and Harassment Appear to Make Up Only a Fraction of Reported Incidents

Most occurrences of reported racism take place offline. However, many people who encounter online hate, including racial hate, may not report the issue. For example, 61% of Americans who said they were physically threatened online did not report the harassment, with nearly half saying they didn’t think the online platform would do anything about it.

Instead, victims may block a user or quit using the online platform altogether. Thus, in reality, online racism may be more prevalent than is reported.

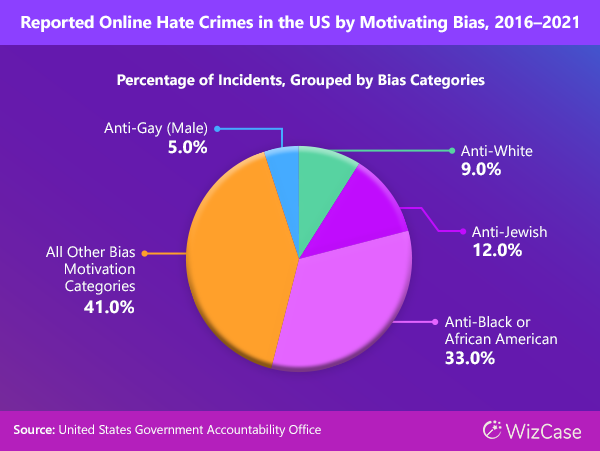

#6: Black People Are Among the Most Frequently Targeted for Online Hate Crimes in the US

In the US, anti-Black sentiment is prevalent online, accounting for 33% of the bias motivations behind all reported online hate crimes. White people were the biggest group of offenders (42%) when the perpetrator’s race was known. In 47% of the crimes, the offender’s race was unclear. The majority (89%) of reported online hate crimes involved intimidation.

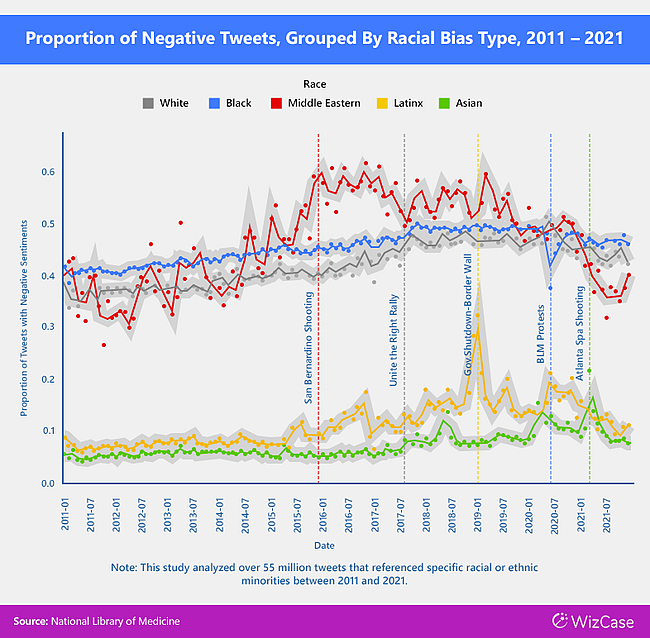

#7: Racist Tweets Increased Between 2011 and 2021 in the US

Research shows that between 2011 and 2021, tweets referencing Black people had the highest proportion of negative sentiment compared to other races. However, between 2016 and 2019, the proportion of negative tweets referencing Middle Easterners surpassed anti-Black tweets. The study postulates that this could be due to the rise of Islamophobia, with the 2015 San Bernardino shooting by Pakistani Muslims further fueling online hate.

Tweets with negative racial sentiments increased by 16.5% at the national level for the decade sampled. Idaho, Utah, Wyoming, Vermont, and Maine experienced the most significant increase in negative sentiment tweets.

Direct or Overt Online Racism Against Specific Race and Ethnic Minorities

Racial minority groups are often victims of hateful incidents online, with certain groups more vulnerable than others. In this section, I present several stats on direct racism, including hate speech and cyberbullying, that’s specific to Black, Asian, Jewish, Muslim, and Indigenous people.

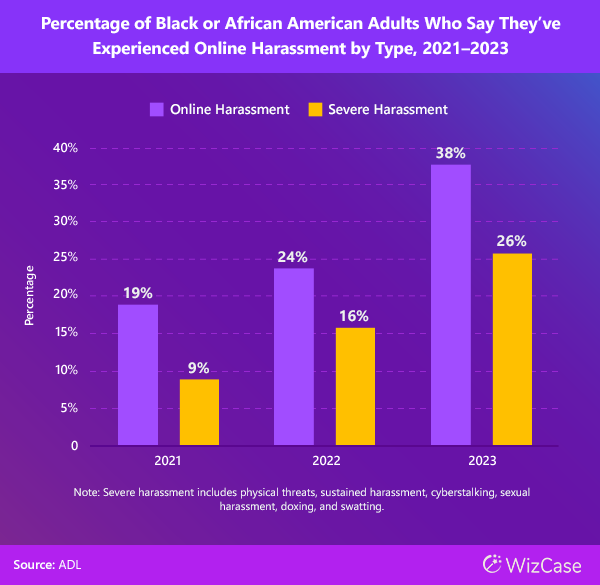

#8: Online Harassment Against Black People Has Been Increasing

Although more than 10 years have passed since the beginning of the Black Lives Matter social movement, Black Americans are still one of the biggest targets of online racism. Attacks against them increased between 2021 and 2023, and in 2023, more Black people (38%) experienced online harassment than other racial or ethnic groups, such as White Americans (34%) and Hispanics and Latinos (30%).

Additionally, 26% of Black people say they have experienced severe online harassment, higher than the overall average of 18%. Severe harassment includes cyberstalking, sexual harassment, physical threats, doxing, and swatting.

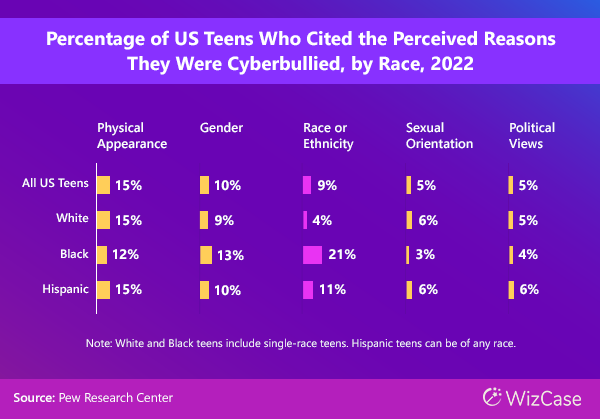

#9: Black or Hispanic Teens in the US Are More Likely to Be Bullied Online Due to Their Race

Teenagers of color feel less safe online due to other people’s reactions to their race. In a survey, the share of Black teenagers who said race was the biggest reason for the cyberbullying they experienced was more than double the average for all teens. The majority of Black (70%) and Hispanic teens (62%) consider cyberbullying to be a major problem for them, compared to a lower percentage of White teens (46%).

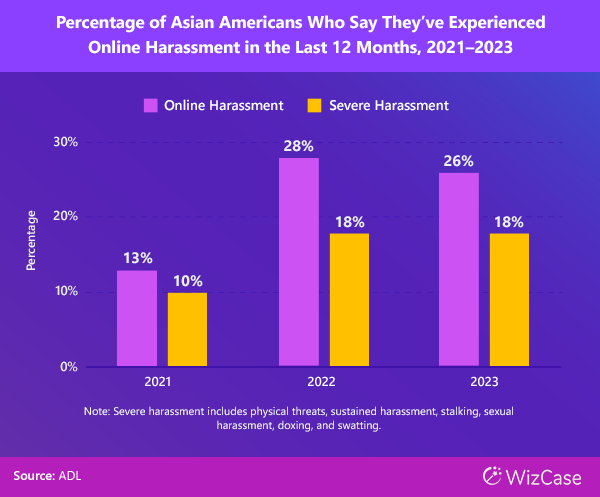

#10: Anti-Asian Sentiment, Heightened During the Pandemic, Seems to Have Passed Its Peak

Anti-Asian hate, which increased during the COVID-19 pandemic, seems to be declining from its peak in 2022. One factor that fanned the online hate was repeated references by then-President Donald Trump to the COVID-19 virus as the “Chinese virus” in 2020.

Nevertheless, 57% of Asian Americans still see racial discrimination as a big issue for them, according to a survey in 2023. In addition, 63% believe that too little attention is being paid to addressing racism concerns among Asian Americans.

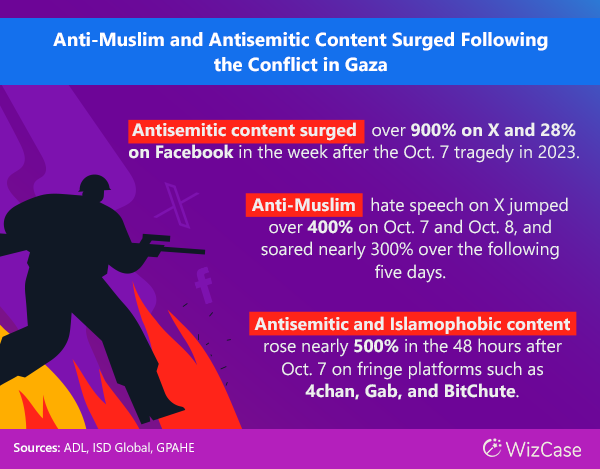

#11: Online Hate Against Muslims and Jews Surged Following the Israel-Hamas Conflict

Anti-Muslim and antisemitic content exploded online following the conflict between Hamas and Israel that started on October 7, 2023. X, formerly known as Twitter, was one of the worst platforms for such hate content. Fringe platforms, where extremism tends to proliferate, also saw a jump in online hate speech.

Since then, the majority of Jews and Muslims in the US feel racism against them has increased, according to a Pew Research Center survey released in early 2024: 89% of Jews and 70% of Muslims said discrimination against their group has increased in the wake of the violent conflict.

Both Jewish and Muslim communities are also having a more challenging time online. In early 2024, the majority of Jewish people said they felt less safe online compared to the 12 months prior, and 41% changed their behavior online to avoid being identified as Jewish.

At the same time, the majority of Muslims and Jews have felt offended by something they saw on the news or social media. Roughly a quarter said they stopped talking to someone in person or unfollowed or blocked someone online because of what the person said about the conflict.

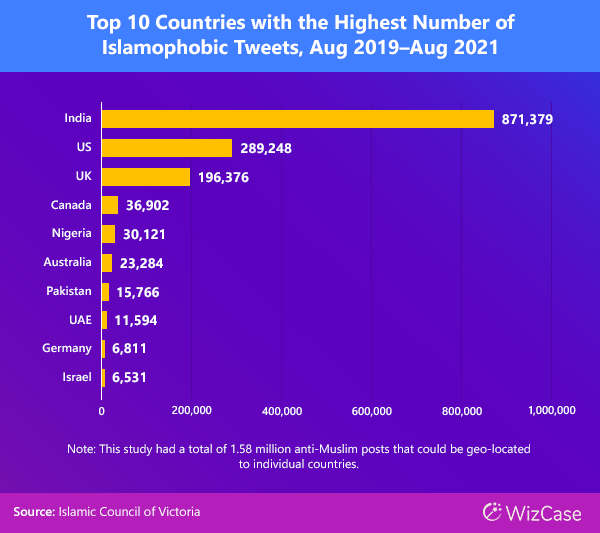

#12: The Majority of Islamophobic Tweets Came from India, the US, and the UK

Prior to the Israel-Hamas conflict, a report found that the majority of anti-Muslim tweets were from 3 countries: India, the US, and the UK. India had, by far, the highest amount of such tweets, constituting 55% of negative tweets in the sample. This result isn’t a surprise as the country has a long-standing history of Hindu-Muslim conflict, with multiple violent protests and hate crimes over the years.

#13: Social Media Is a Conduit for Spreading Racist Ideologies

Extremist groups that spread racist propaganda, such as neo-Nazis, white supremacists, and jihadists, are using social media and messaging apps to spread their ideologies and indoctrinate new members.

Algorithms that determine what people see in their social media feeds are optimized to serve content that maximizes user engagement. Thus, content with a high engagement is likely to be shown in many people’s feeds.
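To make the engagement-driven ranking described above concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `Post` fields, the weights in `engagement_score`, and the sample posts are hypothetical, and real platforms rely on far more complex and undisclosed signals.

```python
from dataclasses import dataclass


@dataclass
class Post:
    """A hypothetical feed item; the field names are illustrative only."""
    text: str
    likes: int
    shares: int
    comments: int


def engagement_score(post: Post) -> int:
    # Assumed scoring rule: shares and comments count for more than likes.
    return post.likes + 2 * post.comments + 3 * post.shares


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest-engagement posts come first, regardless of what they say.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Local bake sale this weekend", likes=40, shares=2, comments=5),
    Post("Inflammatory post that provokes arguments", likes=30, shares=25, comments=60),
    Post("Holiday photos", likes=80, shares=1, comments=10),
]

for post in rank_feed(posts):
    print(engagement_score(post), post.text)
```

In this toy feed, the post that provokes the most replies and shares lands at the top even though it has the fewest likes, which is the dynamic that lets divisive content travel widely.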

Troll farms, run by organizations seeking to promote their propaganda through content, can manipulate social media algorithms to spread ideologies or influence public perception. For example, internal Facebook research found that content from troll farms reached 140 million Americans monthly in the lead-up to the 2020 US presidential election.

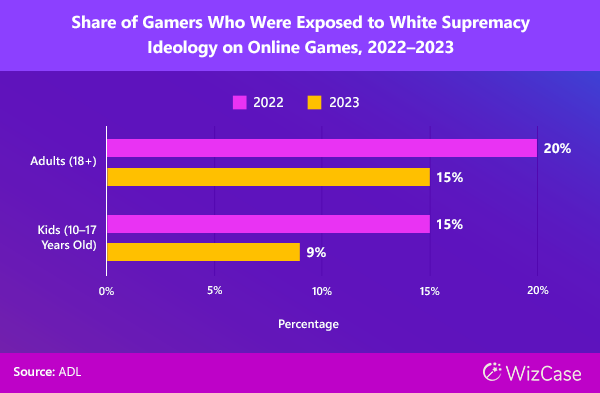

#14: White Supremacy Ideology Is Less Prevalent in Online Games

In 2023, a lower percentage of people, both adults and teens, reported encountering white supremacy ideologies in online games compared to 2022. However, 30% of those who did see such content had been exposed to it on a weekly basis. For teenagers, online gaming seems to be a safer activity compared to social media, where young people have encountered extreme pro-white ideas the most.

A report by the Institute for Strategic Dialogue (ISD) that focused on extremist movements in the UK’s online gaming space found limited evidence that these groups were using games to recruit new members. Rather, online gaming is a space for them to play games and make new friends, just like millions of other gamers.

#15: In Australia, Indigenous People Encounter Online Hate Content Regularly

In Australia, the majority of First Nations people, a term for Aboriginal and Torres Strait Islander peoples, said they frequently encountered racist content on social media. Although the majority said they had reported such content to moderators, such action may not yield results. In 2023, Meta dismissed complaints from Aboriginal leaders that the company wasn’t resolving reports of racism, as it deemed the content met community guidelines.

#16: Athletes Are Targets of Online Abuse and Harassment Due to Their Race

Public figures already face more scrutiny than the average person, and it’s worse if they are persons of color. Athletes competing in global sporting events, such as the FIFA World Cup and the Olympics, have to contend with online abuse, a significant proportion of which is motivated by racial prejudice.

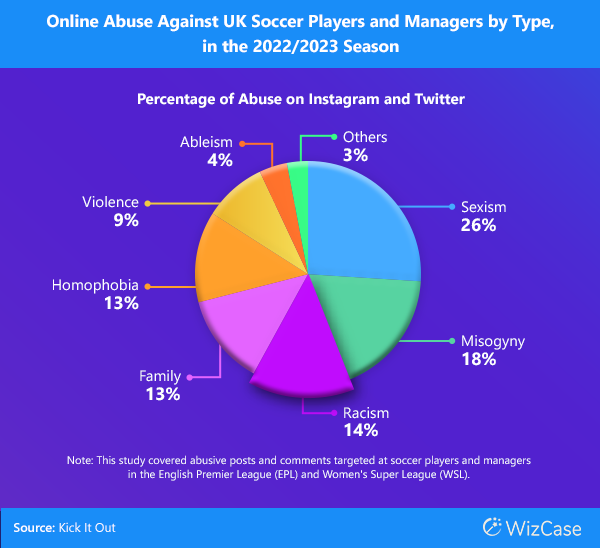

#17: In the UK, Online Racism Against Soccer Players Is Growing

Racial hate is growing on social media against English footballers, according to Kick It Out, an anti-racism organization based in the UK. Online racism cases grew three-fold in the 2022/2023 season to 112 from 37 reported cases in the season prior. Islamophobic posts grew six-fold over the same period. For Black players in the men’s English Premier League (EPL), considered the most-watched league globally, over 75% of online abuse was racist.
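As a quick check on the “three-fold” figure above, the short Python sketch below computes the fold change and the equivalent percentage increase from the two report counts quoted in the text. The helper names `fold_change` and `percent_increase` are my own and purely illustrative.

```python
def fold_change(previous: int, current: int) -> float:
    """Return how many times larger `current` is than `previous`."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return current / previous


def percent_increase(previous: int, current: int) -> float:
    """Return the increase from `previous` to `current` as a percentage."""
    return (fold_change(previous, current) - 1) * 100


# Figures quoted above: 37 reports in the prior season, 112 in 2022/2023.
print(f"{fold_change(37, 112):.2f}x")        # about 3.03x
print(f"{percent_increase(37, 112):.0f}%")   # about 203%
```

A three-fold rise and a roughly 200% increase describe the same change, which is worth keeping in mind when comparing headlines that use different framings.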

#18: Women of Color in Politics Bear the Brunt of Twitter Hate

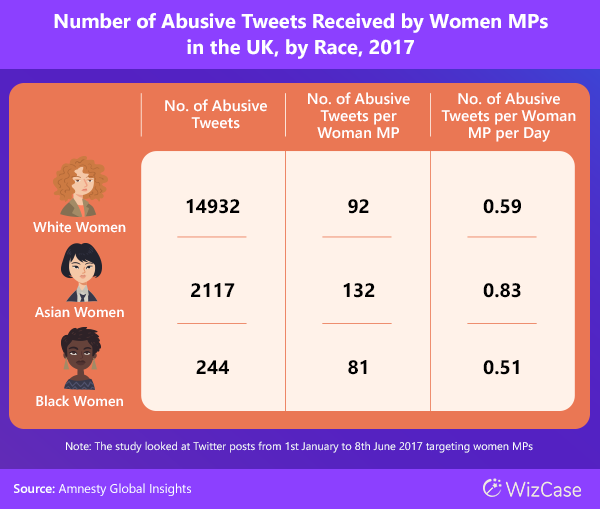

Black and Asian women MPs in the UK Parliament receive a lot of hate online compared to their White female peers, and Asian women MPs have it the worst on X.

Research by Amnesty International shows that Asian women received an average of 132 abusive tweets per woman, which is 1.6 times more than Black women MPs and 1.4 times more than White women MPs. For context, the racial representation of women MPs in 2017, when the study was done, was 89% White, 8.8% Asian, and 2.2% Black.

Indirect or Covert Online Racism: A Look at Implicit Bias, Stereotyping, and Lack of Representation

People of color may not realize that they are subject to implicit bias online, when job hunting, studying, or in their daily interactions. They are also less likely to see non-whites represented in online content and may encounter the occasional content that is culturally insensitive, or that reinforces racial stereotypes. This section covers several examples of indirect racism happening online.

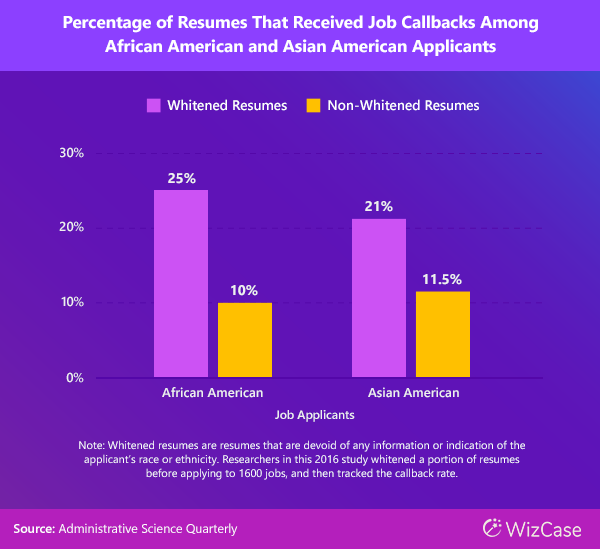

#19: Black People and Asians Are Less Likely to Get a Job Callback

Racial bias seems to extend to online hiring processes. Such biases would make it harder for racial minorities to find jobs, further widening the income disparities between races.

Research shows that “whitened” resumes, in which it was hard to discern the applicant’s race, were up to two times more successful in getting a callback. Researchers reached this result by creating resumes for Black and Asian applicants and then “whitening” a portion of them before submitting them to 1,600 entry-level jobs in 16 US cities.

#20: People of Color Experience More Discrimination on Dating Apps

Online dating is more complicated for racial minorities, especially for multiracial people, based on a UK survey. Overall, 1 in 3 UK adults said they have experienced racial discrimination, unsolicited fetishization, or microaggressions.

Another talking point for online dating discrimination is the presence of AI bias. According to a paper by Cornell University researchers, dating apps that allow race filtering and algorithms that favor pairing the same races are reinforcing racial biases. With 3 in 10 US adults now using a dating app or site, biased AI results could change the course of people’s lives in finding partners and building families.

#21: Non-White Students Face Implicit Bias from Educators in Online Settings

In an experiment by Stanford University, researchers created fictional student accounts with names that people would easily identify as either White, Black, Indian, or Chinese and posted comments using those accounts across 124 massive open online courses.

Overall, course instructors responded to 7% of comments posted. However, for accounts representing White male students, the response rate was almost twice as high, at 12%. With more institutions offering online courses and more students willing to study fully online, this bias may hinder students of color.

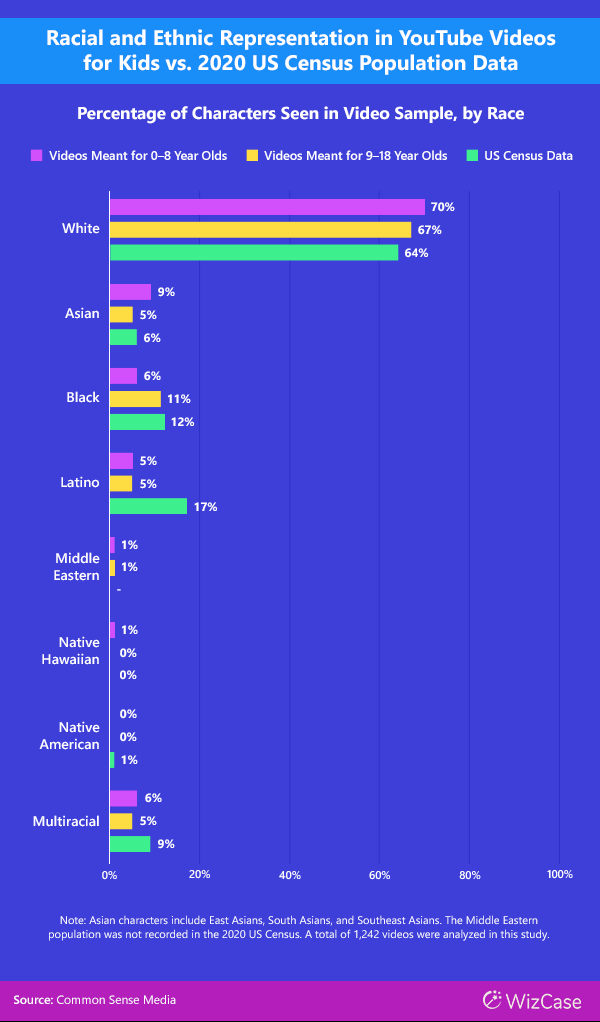

#22: YouTube Videos Are Lacking in Racial Representation

YouTube videos, watched by many younger audiences, lack diversity, according to a report by Common Sense Media. Among racial demographics, Latinos were the most underrepresented on YouTube, based on an analysis of 1,242 videos meant for kids, totaling 344 hours of content. Latino characters made up only 5% of the total characters, compared to a 17% Latino share of the population cited from the 2020 US Census.

In videos meant for young kids under 9 years old, 62% didn’t feature any Black, Indigenous, or people of color (BIPOC) characters, and 10% showed shallow or stereotyped portrayals of BIPOC characters. In videos meant for tweens and teens, the use of the N-word, racial stereotype depictions, and jokes that were racially themed appeared in 1 out of 10 videos.

#23: Generative Text-to-Image AI Can Be Racially Biased

Text-to-image AI, a type of generative AI technology that produces images from prompts, has been found to produce racially biased results. This is a long-standing issue, as AI models are trained using existing content that often lacks diversity and portrays racial stereotypes that are propagated further through AI outputs.

A 2023 Bloomberg investigation on Stable Diffusion consistently found evidence of racial bias when generating images for different professions. But correcting biased results isn’t always a simple fix. In early 2024, Google received criticism when its Gemini AI displayed several incorrect depictions of historical figures, such as drawing Nazi figures as people of color. The results were seen as an overcorrection in addressing the lack of diversity in AI outputs.

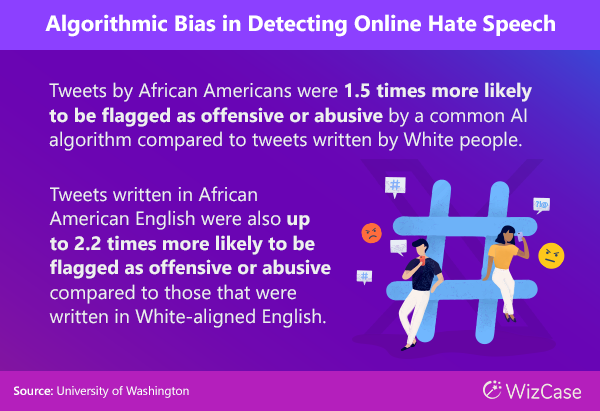

#24: AI Algorithms Can Be Racially Biased When Detecting Online Hate Speech

An AI hate speech detection model is more likely to label tweets written by Black people or in Black American dialect as harmful than tweets written by White people or in standard American English.

This is due to the bias already present in the training dataset that humans annotated. However, when the annotators were made aware of racial dialects and context, they were less likely to label tweets by Black people as toxic, and this filters through to the AI results.
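One common way to surface this kind of disparity is to compare false positive rates, meaning how often harmless posts are wrongly flagged, across dialect groups. The Python sketch below shows the idea under stated assumptions: the records are invented for illustration and are not data from any study, and the group labels and the `false_positive_rates` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical evaluation records: (dialect_group, is_actually_hateful, model_flagged).
# These rows are invented for illustration only.
records = [
    ("black_american_dialect", False, True),
    ("black_american_dialect", False, True),
    ("black_american_dialect", False, False),
    ("black_american_dialect", True, True),
    ("standard_american_english", False, True),
    ("standard_american_english", False, False),
    ("standard_american_english", False, False),
    ("standard_american_english", False, False),
    ("standard_american_english", True, True),
]


def false_positive_rates(rows):
    """Share of non-hateful posts wrongly flagged, per dialect group."""
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, is_hateful, model_flagged in rows:
        if not is_hateful:
            benign[group] += 1
            if model_flagged:
                flagged[group] += 1
    return {group: flagged[group] / benign[group] for group in benign}


rates = false_positive_rates(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of benign posts flagged")
```

A large gap between the two rates would suggest the model penalizes a dialect rather than actual hate speech, and that the training labels deserve a second look.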

The Impact of Online Racism

Words can be dangerous even from virtual strangers in the online world, as evidence shows they can affect a person’s mental health and confidence. This section looks at the impact of online racism on individuals and the far-reaching consequences that further widen racial inequities.

#25: Online Hate Speech Could Trigger Real-Life Hate Crimes

A US-based study found that a rise in online hate speech is associated with an increase in hate crimes committed against racial minorities. A study in the UK yielded similar results when analyzing the link between hate tweets and hate crimes in London over 8 months. The reverse also appears to be true. In a separate study, offline events such as the killing of George Floyd, which sparked a wave of Black Lives Matter protests in 2020, led to an increase in online hate speech.

While correlation doesn’t imply causation, these findings suggest that what happens online can have real-world consequences and vice versa.

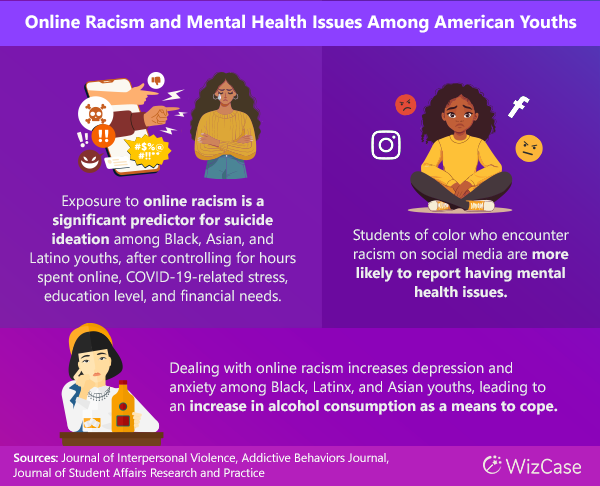

#26: Online Racism Is Linked to Mental Health Issues Among Youths

Multiple studies have shown that exposure to online racism is linked to either developing or intensifying mental health issues such as suicidal ideation, depression, and anxiety among youths. These studies suggest that online racism affects youths in the same way racism does in the real world and that young people are particularly vulnerable due to the amount of time they spend online. The impact would likely shape their identities and values as adults.

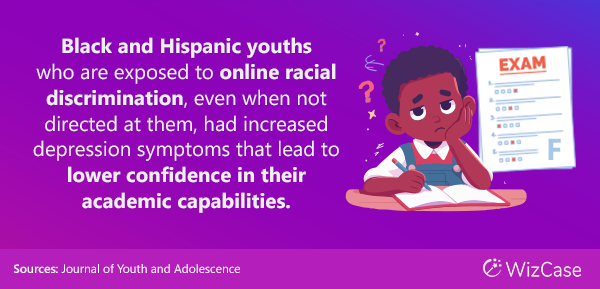

#27: Online Racial Discrimination Damages Academic Confidence and Motivation

It’s not just direct mentions and personal conversations that youth minorities have to be wary of. Generic racist online content can negatively affect Black and Hispanic teens, which in turn hurts their academic confidence, according to a 2022 study.

An earlier study in 2016 recorded the same result, showing that increased exposure to online racial discrimination led to a decline in academic motivation among African American and Latino teens, even after controlling for the educators’ biases and average baseline grades.

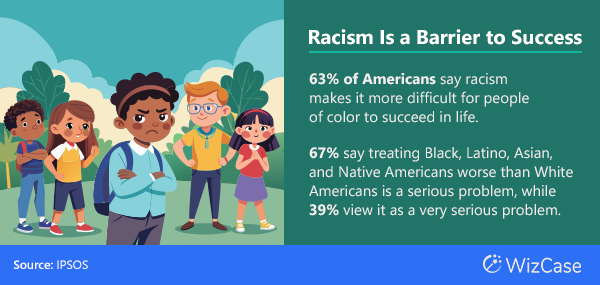

#28: Majority Thinks Racism Makes It Harder to Achieve Success in Life

Racism presents a barrier for racial minorities to succeed in life. Online racial hate also plays a hand in this, with 29% of Black people saying they have been financially impacted as a result of online harassment in a 2024 survey.

Racial inequality affects the income, wealth, health, and lifespan of minorities. According to the US Census Bureau, Black households had the lowest median annual household income in 2022, at $52,860, 29% less than the $74,580 median for households of all races. In Q2 of 2022, 75% of White households owned homes, while only 45% of Black households, 48% of Hispanic households, and 57% of households of other races owned their homes.

Income and wealth form part of the social determinants of health, which are non-health factors that determine an individual’s health outcome, as defined by the World Health Organization. During the COVID-19 pandemic, death and hospitalization rates for Black, Latinx, and Native American people were higher than for White people. The average life expectancy of Black Americans is also 4 years shorter than that of White Americans.

#29: The Economic Cost of Racism

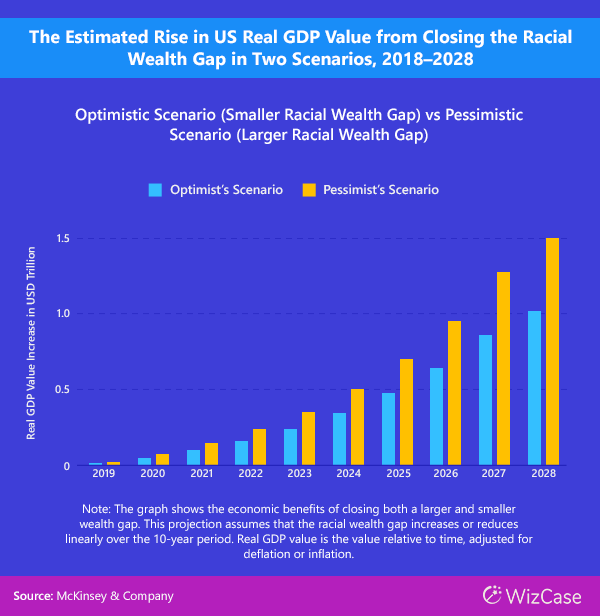

Racism is potentially hurting economies.

A McKinsey study estimated that the racial wealth gap produces a “dampening effect on consumption and investment,” equating to $1 trillion to $1.5 trillion between 2019 and 2028. Closing this gap would add an estimated 4%-6% to the projected GDP in 2028 and increase the per capita GDP by between $2,900 and $4,300.
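The figures above can be sanity-checked against each other with a little arithmetic. The Python sketch below pairs the low ends and the high ends of each range, which is my own assumption about how the estimates line up, and derives the total GDP and population they would imply. The `implied_totals` helper is hypothetical.

```python
def implied_totals(gain_usd: float, share_of_gdp: float, per_capita_usd: float):
    """Return the (total GDP, population) implied by one set of figures."""
    implied_gdp = gain_usd / share_of_gdp            # GDP the percentage implies
    implied_population = gain_usd / per_capita_usd   # people the per capita gain implies
    return implied_gdp, implied_population


# Low and high ends of the ranges quoted above, paired by assumption.
scenarios = {
    "low": (1.0e12, 0.04, 2900),
    "high": (1.5e12, 0.06, 4300),
}

for name, figures in scenarios.items():
    gdp, population = implied_totals(*figures)
    print(f"{name}: implied GDP ${gdp / 1e12:.1f} trillion, "
          f"implied population {population / 1e6:.0f} million")
```

Both ends point to a projected GDP of about $25 trillion and a population of roughly 345 to 349 million, so the three ranges are at least consistent with one another.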

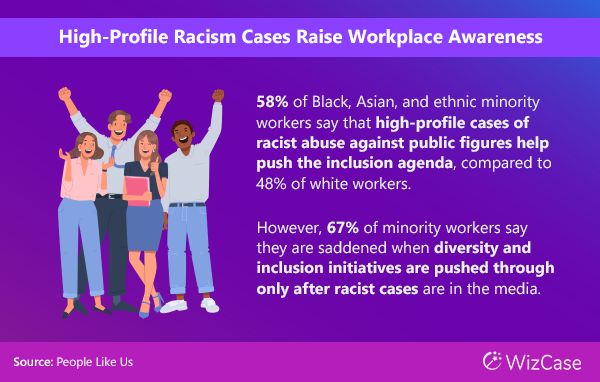

#30: High-Profile Racist Cases Online Help Push Workplaces to Be More Inclusive

High-profile racist incidents, including online cases, help raise public awareness and could lead to positive changes.

Following England’s loss in the Euro 2020 final, Black soccer players were subject to heavy online abuse. A survey found that the incident helped raise awareness and, in some cases, pushed workplaces further in the direction of equality, such as hosting discussions around inclusivity.

Tackling Online Racism

The solution to online racism, like racism in general, is neither straightforward nor easy. The fast-evolving online world means jurisdiction and legal responsibilities are often confusing or undefined. What’s clear is that people believe governments and online companies have a bigger role to play in eliminating online racism. This section outlines the actions and policies that have materialized so far.

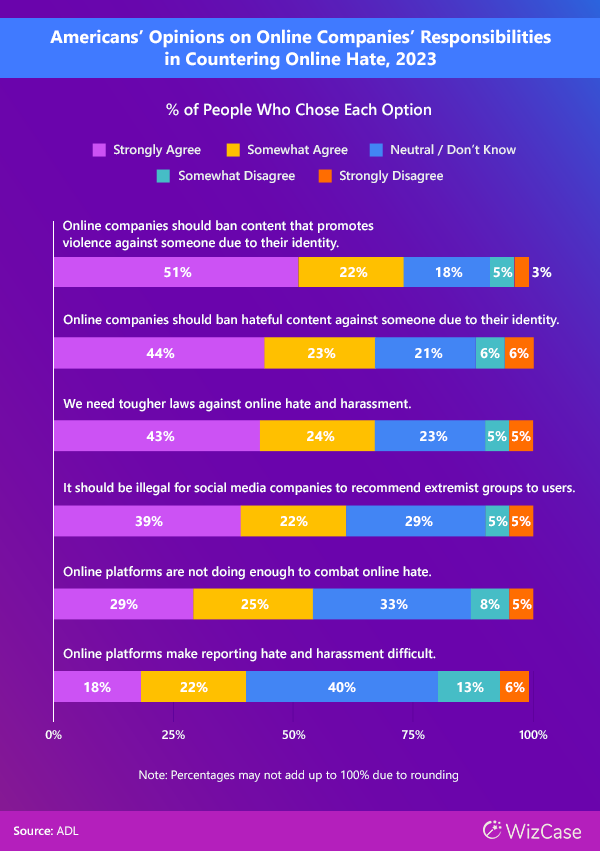

#31: Majority Wants Tougher Laws and for Online Companies to Do More

In a 2023 survey, the majority of respondents agreed that stricter laws were needed for online hate and harassment and that online companies are responsible for banning hateful content that targets an individual or group’s identity.

Similar results were seen in a separate survey of 16 countries. Respondents feel that governments, regulatory bodies (88%), and social media platforms (90%) are all responsible for dealing with hate speech and online disinformation.

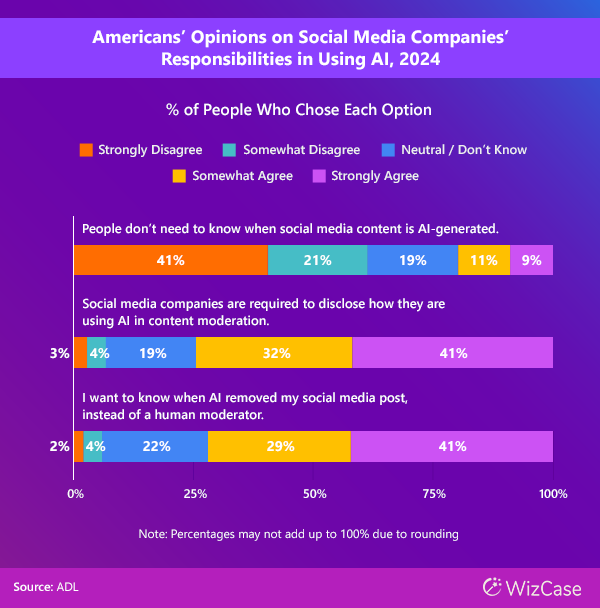

#32: Social Media Companies Should Be Liable for Harms Caused by AI

Most people believe that social media platforms hold legal responsibility when their AI algorithms cause real-world damage. In a 2024 survey, 73% of people believed that social media companies should disclose how they are using AI for content moderation, while 62% would like to know when social media content is AI-generated.

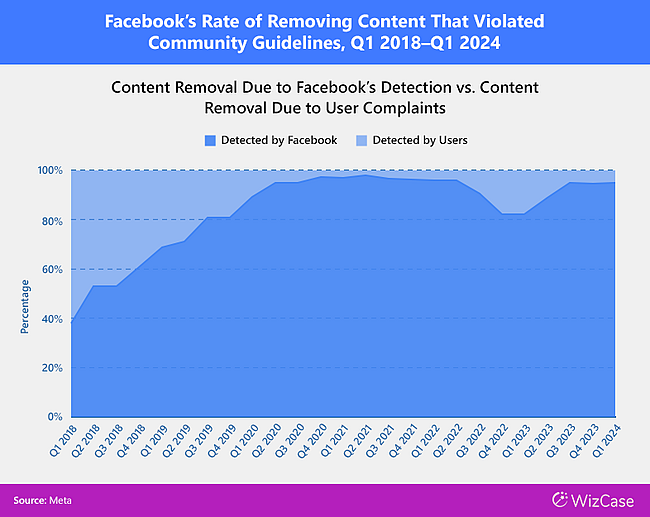

#33: Facebook and YouTube Are Trying to Clean Up Their Acts

Facebook and YouTube are trying to be more proactive in eliminating hateful content on their platforms, while X is lagging in such efforts.

Facebook claims that it found and acted upon 94.5% of hateful content in Q1 of 2024 before users saw it, compared to only 23.6% at the end of 2017. The social media behemoth had previously faced criticism that its AI algorithm to detect hate speech was racially biased against minorities.

96.4% of YouTube videos that were removed due to violations of the platform’s community guidelines in Q1 2024 were detected by the company’s automated flagging system. These videos included violent and hateful content against racial minorities and religious groups. In comparison, 79.6% of videos removed in Q4 2017 had been automatically flagged, while the rest were due to reports by users, government agencies, and organizations.

A report by the Center for Countering Digital Hate (CCDH) in 2023 found that X had failed to act on 86% of reports on hateful content, including tweets promoting antisemitism, anti-Black racism, neo-Nazism, and white supremacy. X owner Elon Musk sued CCDH as a result, but the presiding judge dismissed the lawsuit in March 2024.

Earlier, an analysis of the top 20 tweets between October 2022 and January 2023 found that ethnic and racial slurs had increased on X around the time Musk took over the platform.

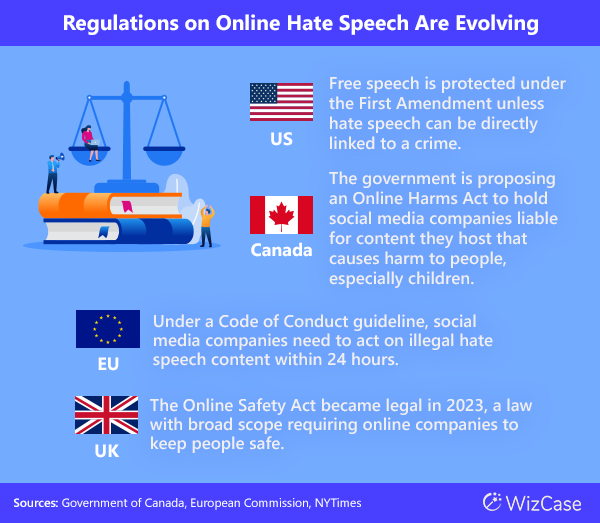

#34: Efforts Are Underway to Better Regulate Online Hate Speech

With the rise in hate speech online, including racist content, there are growing calls for governments to strengthen the laws and for companies to be made more accountable for the content posted by their users.

Europe and the UK have made significant progress with new legislation enacted in 2023. The UK’s Online Safety Act allows fines of up to 10% of a company’s global revenue. The EU’s Digital Services Act, a law combating a wide range of illegal content online, including hate content, imposes a maximum fine of 6% of a company’s global revenue. Meanwhile, Canada is likely to follow suit with a bill in Parliament that protects people from online harm.
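To give a sense of scale for the penalty caps mentioned above, the Python sketch below applies the two quoted percentages to a hypothetical company. The $100 billion revenue figure and the `max_fine` helper are assumptions for illustration, and the statutes themselves define revenue and the caps more precisely than this simple multiplication.

```python
# Maximum fine as a fraction of global revenue, as quoted above.
MAX_FINE_RATE = {
    "UK Online Safety Act": 0.10,
    "EU Digital Services Act": 0.06,
}


def max_fine(global_revenue: float, law: str) -> float:
    """Upper bound on a fine under `law` for a given global revenue."""
    return global_revenue * MAX_FINE_RATE[law]


# Hypothetical company with $100 billion in global revenue.
revenue = 100e9
for law in MAX_FINE_RATE:
    print(f"{law}: up to ${max_fine(revenue, law) / 1e9:.0f} billion")
```

For a company of that size, the caps work out to as much as $10 billion and $6 billion respectively, which helps explain why large platforms treat these laws as a material risk.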

The Bottom Line

Some of the statistics presented here on online racism may be alarming and disappointing. However, discussing these facts can help raise awareness of racial inequalities and the lack of diversity, emphasizing the critical need to tackle digital racism.

Online racism affects both individuals and communities and can even hinder an entire country’s economic growth. More must be done to ensure racism doesn’t increase in the digital world. This involves improvements in legislation, corporate policies, technology, education, and awareness.

Ultimately, you can contribute to combatting online racism by speaking up against racial discrimination, reporting racist incidents you encounter online, and supporting organizations or causes that are working toward an inclusive digital world.

Attention: WizCase owns the visual and written content on this site. If our cybersecurity insights resonate with you and you wish to share our content or visuals, we ask that you credit WizCase with a link to the source in recognition of our copyrights and the diligent work of our expert cybersecurity researchers.
