How Inclusive Is Social Media? A Comparative Research Study

Increasingly, fears of and reactions to “cancel culture” and censorship on social media make headlines. Conservatives and liberals alike have concerns about representation across different platforms. Conservatives claim they are being silenced, while liberals claim that hard-right accounts are being inappropriately boosted. Both sides are saying that more representation is needed for their group.

So, how inclusive is social media really? Whose claims are right? Whose voices are being lifted up and whose are being silenced? For many, myself included, this is a heated debate they feel emotionally invested in. They want these questions answered, they want the causal factors identified, and they want solutions.

So, to address this topic as objectively as possible, I teamed up with my colleagues at WizCase to create fresh social media accounts. With a VPN connection to the US, we were also able to control for location and see what new users are exposed to before algorithms kick in and take over.

Our results showed differences between platforms: certain demographics appear more than others. Some demographics were also notably absent, which is partly an issue of visible versus non-visible traits. For example, some religions have common forms of religious dress, but not all do, and some disabilities are visible while others are not. The same is true of gender and sexuality. For gender and ethnicity, we relied on visual cues. For religion, disability, and sexuality, we had to rely on the topics of posts.

This is to say that we worked hard to be as unbiased as we could, and yet it is likely the case that some populations were indeed represented and there was no way for us to tell. In fact, there would be no way for a casual observer to tell either, as this would require knowledge of the content creator’s background.

Although our findings did not show that any groups are being actively silenced, some voices in our data were indeed being boosted.

Results: What We Found

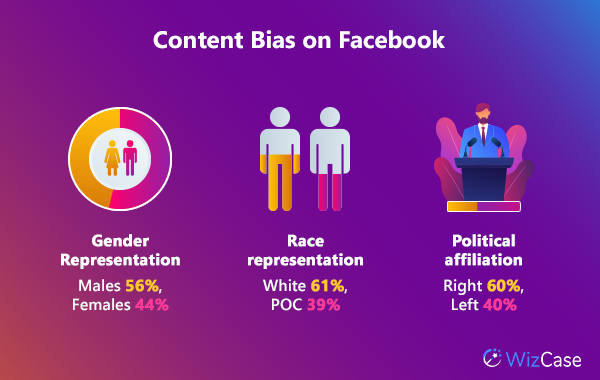

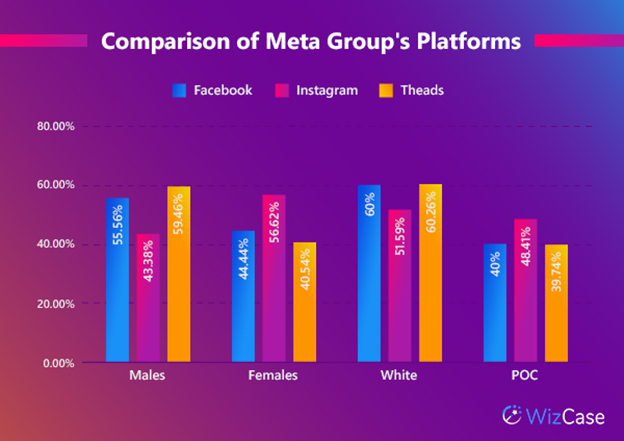

On the whole, we found most of the platforms to be dominated by depictions of men, mostly White. The extent varied across platforms, and Instagram was a notable departure. In this section, I’ll break down our demographic findings and discuss notable numbers by platform.

For context, we analyzed 100 posts on most platforms (Facebook, Threads, X, TikTok, and YouTube). On Instagram, we analyzed 135 data points because we included the 3 ways in which this platform serves content (“Suggested for you,” Reels, and Explore): 35 accounts, 50 Reels, and 50 posts in the Explore section.
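As a quick check on the sample sizes described above, the arithmetic can be sketched in a few lines of Python. The dictionary and function names are purely illustrative and are not part of the study's tooling; the numbers come straight from the paragraph above.

```python
# Posts analyzed on each platform other than Instagram, as stated in the text.
POSTS_PER_PLATFORM = {
    "Facebook": 100,
    "Threads": 100,
    "X": 100,
    "TikTok": 100,
    "YouTube": 100,
}

# Instagram's sample combines the three ways the platform serves content.
INSTAGRAM_PARTS = {
    "Suggested for you (accounts)": 35,
    "Reels": 50,
    "Explore": 50,
}


def instagram_total(parts):
    """Add up the Instagram data points across its three surfaces."""
    return sum(parts.values())


def overall_total(per_platform, instagram_parts):
    """Add up every data point across all six platforms."""
    return sum(per_platform.values()) + instagram_total(instagram_parts)


print(instagram_total(INSTAGRAM_PARTS))                     # Instagram data points
print(overall_total(POSTS_PER_PLATFORM, INSTAGRAM_PARTS))  # all platforms combined
```

Keeping Instagram's three surfaces separate makes it easy to see where its larger sample comes from when comparing it to the other platforms.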

Editor’s Note: While looking at posts on X, we saw a notable departure from the types of content found on the other platforms. The other platforms showed us almost exclusively content that could be considered appropriate for all users. X did not, leading us to define the following types of content as “harmful”:

- Graphic violence: shootings, deaths, and beatings

- Political propaganda: items that appear to be news but are biased towards one group and provably false

- Sexually explicit: offering nude photos

- Negative stereotypes about any group

Although the term “harmful” can be read as subjective, the categories above are all reportable on X and thus can be considered violations of X’s content policies.

Facebook

Because of Facebook’s strict protections against fake accounts, any new accounts our researchers created via a computer using a VPN were immediately banned.

This means that new accounts need to be verifiably real, and the lack of bot accounts may help explain our findings on the political spectrum: we were served mostly neutral content and a balance of political views from both the left and the right.

At first glance, this was surprising because Facebook has been accused of being home to “fake news” and propaganda. However, our research suggests that content that falls into those categories is likely served based on a content algorithm and not indiscriminately to all users.

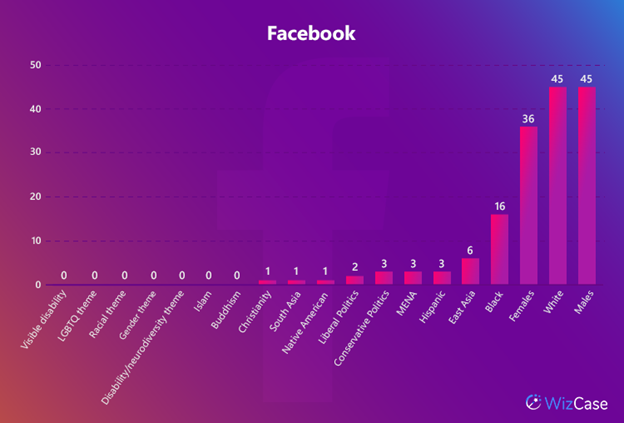

We found the content to be suitable for all ages — we didn’t see any explicitly violent or sexual content. We were also served no content on any diversity themes such as disability, LGBTQ+ topics, race, or gender.

Although I wouldn’t call Facebook’s content “inclusive” because of its lack of diversity themes, I would call it the most balanced in our research since the content we were served was the closest to 50/50 across all gender, race, and political ideology metrics. One thing to note is that around 60% of people in the US are considered White, so in this study, Facebook accurately represented the population.

Jump to Facebook’s complete data set

Instagram

After creating a Facebook account, setting up an account on Instagram was relatively easy; Instagram used our Facebook account to verify our identity. It’s important to note that this may have introduced some bias in our data. However, since we didn’t follow or like any posts on Facebook, the influence should be minimal.

The platform asked us to choose some accounts to follow to get set up. We looked at the content of these accounts to add them to our data but didn’t follow any of them.

Over 75% of the accounts that were shown to us while we were setting up our account were of White Americans. One notable exception was a Black American female rapper whose account at the time had only 54 posts, many of which were sexually suggestive in nature.

This account was not the only concerning content we were served — in the explore section, we were served videos of very young women (possibly too young to be on the platform) who were being complimented on their looks in the comments.

In the reels section, we saw a number of violent videos. Some of these seemed to be created to bypass some type of content filter, as they featured videos of people getting hurt over the top of videos of the ocean.

Similar to Facebook, we saw no depictions of disability, nothing that presented a discussion on racial or LGBTQ+ issues, and nothing explicitly political.

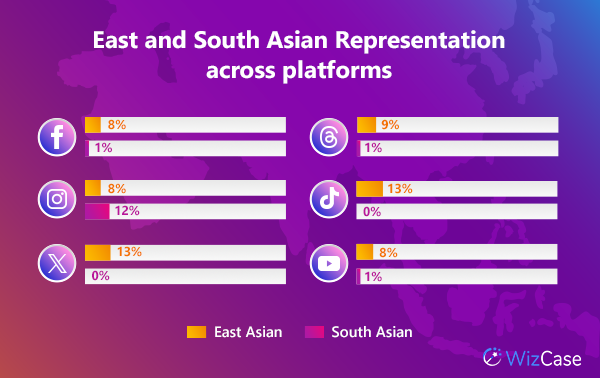

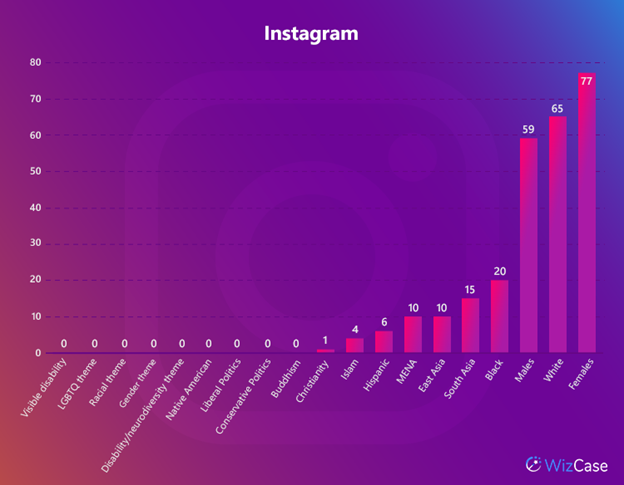

In terms of ethnicities depicted, the breakdown was roughly similar to Facebook’s, with White people being the most represented group. However, we did notice that East and South Asian content was very heavily represented, enough that the researcher double-checked that their VPN was still connected to the US.

This prompted us to look at Instagram’s demographics. According to Meta, Instagram’s largest user base is in India. In fact, there are twice as many active users in India as there are in the US (its second-largest user base).

Jump to see Instagram’s full data set

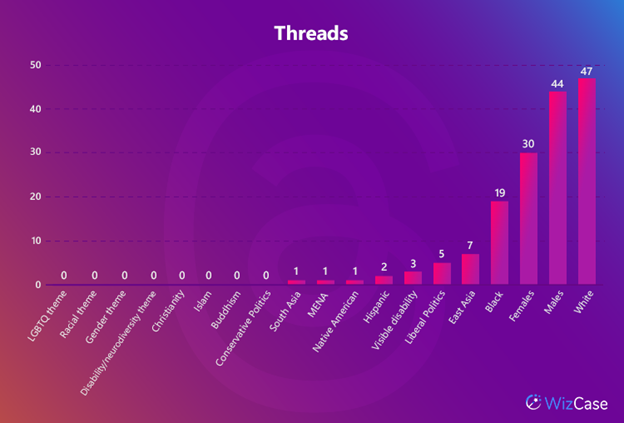

Threads

The content we were served on a fresh Threads account was largely age-appropriate: lots of animals, influencers trying to get new followers, and posts from official brands. We only saw a handful of political posts and, unlike other platforms, they were all left-leaning. This seems pretty intuitive, as the platform was created as a direct response to Elon Musk’s purchase of Twitter.

Although the lack of anything we were looking for may seem notable or anomalous, the same thing happened when I used Threads. Even though I was engaging with content on the platform and actively using Instagram and Facebook, most of the posts I was served were from influencers promoting a product or trying to farm engagement.

In terms of demographics, one interesting point we noticed was that Threads’ data was close to identical to Facebook’s, but not Instagram’s. As all three platforms are linked, and you must have an Instagram account to create a Threads account, it would follow that Instagram and Threads would be closer — not Threads and Facebook.

Jump to see Threads’ complete data set

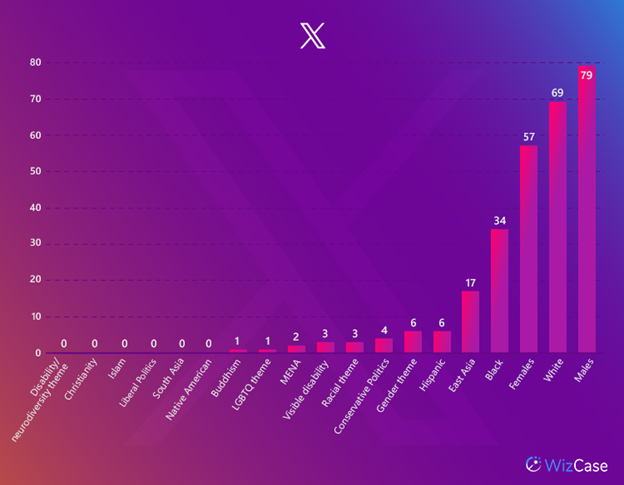

X (Formerly Twitter)

Based on my personal experiences with X, I wasn’t surprised to find that the content we were served in our research was the most disturbing. Initially, we were served posts based on the interests we selected when we set up the account (music, dance, and science). That didn’t last very long — our For You feed quickly began to change.

It started with a potentially misogynistic video and quickly devolved into violence. We saw videos depicting police brutality, an officer-involved shooting, and other police violence. We saw a whole spectrum of people hurting others (including the elderly and teenagers) and themselves: tons of fights, people dying, and people committing suicide.

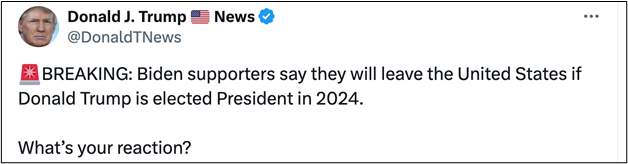

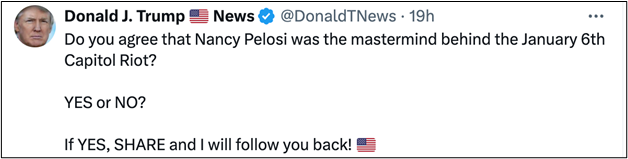

Looking at the political messages in our results, we were not served any content that could be considered liberal or representing left-wing politics. What we did see was political propaganda supporting Donald Trump.

The Tweet below was the one we were served initially. It positions itself as a news item but provides no details on who or how many Biden supporters are saying this and provides no sources for this information. After doing some of our own research, we could find no detailed information on this topic.

Note that this specific account now seems inactive, and the handle belongs to a private account.

Looking at the account’s other posts, we found the account to be part of a propaganda campaign. It has a blue check, which means that its visibility is being artificially boosted, and it’s spreading right-wing conspiracy theories:

In the years before Elon Musk bought Twitter and rebranded it to X, many American conservative voices claimed they were being silenced by the company. However, based on our findings, this is not the case now. X had the highest proportion of conservative voices among the platforms we studied. In fact, while only 4 posts in our sample from X contained any political messaging, 100% of those were conservative.

Jump to see X’s complete data set

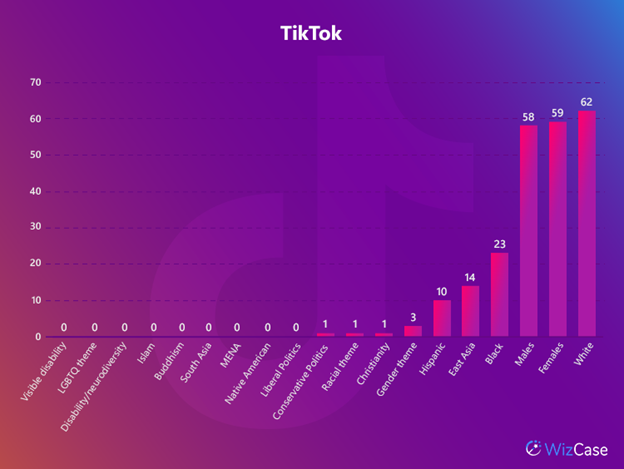

TikTok

TikTok was notably more family-friendly than X. Nearly all of the content we saw was suitable for every age group, with the majority of the videos being humorous. We also saw videos about fashion and beauty, some lip sync and music-related videos, and some about parenting.

One interesting thing that we came across was a couple of videos related to men’s mental health. We saw a girl talking about how rarely men are given compliments, featuring clips of famous men talking about their mental health struggles. There was also one of a teen boy talking about his personal struggles.

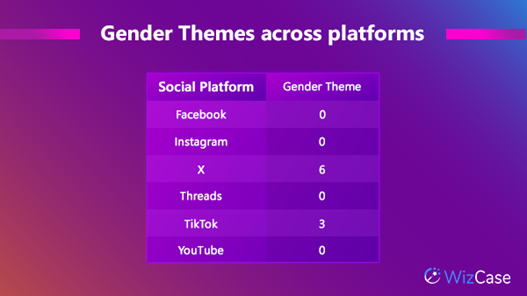

TikTok was the only platform on which we saw these discussions and the only platform besides X that showed us any topics on gender at all.

The one video we saw that wasn’t appropriate for young audiences was of a rapper talking about being attracted to minors. This video was found to be problematic for a number of reasons: the discussion itself, as well as the sometimes tacit and sometimes stated approval the statements garnered in both the video and comments.

Overall, the fact that what we were served by TikTok was generic and non-controversial didn’t surprise me. The platform heavily relies on user interaction to curate feeds, beginning with the initial content you interact with. We only scrolled for a limited amount of time and didn’t otherwise engage with any content.

Jump to see TikTok’s full data set

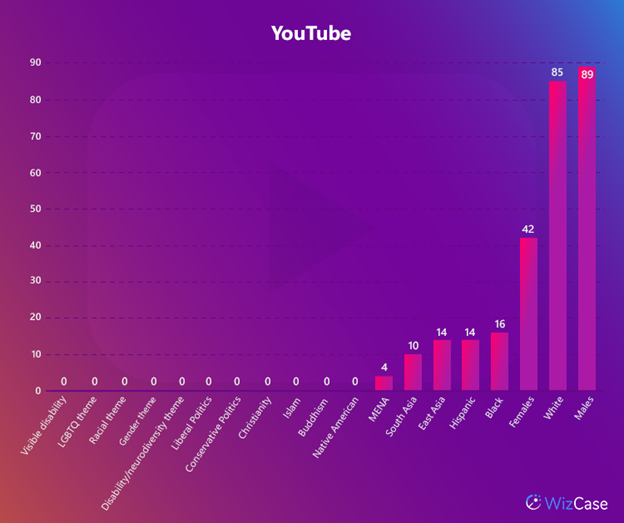

YouTube

The videos YouTube served us were similarly non-controversial for the most part. We started with a music video that was trending on several social media platforms. From there, YouTube suggested a couple of other music videos. Then there was a trailer for a game that features fantasy violence, a couple of movie trailers for violent action and horror movies, ending with videos featuring police violence.

After that, we chose a video about dangerous engineering jobs. Following our methodology, we then selected the first video YouTube recommended from a different account, which led us to educational videos about engineering and science (learning about things), followed by sensational science (looking at things), and finally, educational videos about animals.

Overall, there was nothing that we considered unsuitable for young audiences, although the argument could be made that some of the violent content we saw wouldn’t be appropriate for impressionable audiences.

Interestingly, we also didn’t see any political content or social views, although it is possible that we would have gotten there eventually if we had continued down the police violence rabbit hole. As a matter of curiosity, I searched for a random Andrew Tate video. After watching one or two, I saw Jordan Peterson and Ben Shapiro in the sidebar. After watching a couple of those videos with political themes, I began to get videos on that and similar themes.

What that shows is that YouTube is extremely responsive to user choice. When we viewed a random selection of videos YouTube served us with minimal user intervention, we saw a wide variety of topics — almost as if the platform was asking “Are you interested in this? No? What about this?” and so on.

Jump to see YouTube’s full data set

How the Platforms Compare

Thematically, we saw a lot of similarities across platforms — the themes we were looking for largely didn’t appear at all. However, we did see some interesting trends. In this section, I’ll compare what themes did and didn’t appear across platforms.

Ethnic diversity

We divided ethnic groups into the following categories: Black, East Asian, South Asian, Middle East & North Africa (MENA), Hispanic, White, and Native American.

We found that White people were represented more than any other group, accounting for 51% to 60% of all posts depending on the platform. The next largest group was Black people, but even their highest share, on YouTube, was just 29%.

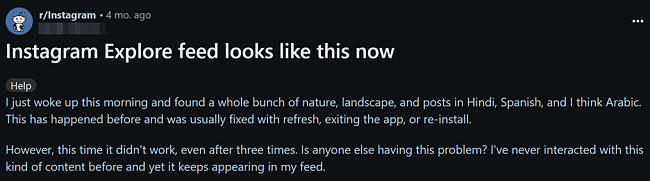

Instagram had the largest percentage of East and South Asians. At one point in our research, the researcher’s For You page (FYP) was almost exclusively content from those groups. We never learned the cause, but did find some Reddit users were seeing the same thing and were similarly confused.

X had the largest raw numbers of depictions of races other than White. However, the posts were overwhelmingly negative, so I wouldn’t consider this a win for diverse voices.

Instagram had the second-highest numbers, and this was largely due to the higher amount of East and South Asian content. This raises an interesting point. On the one hand, it looks like an anomaly because of the other numbers and the response from other users. The Reddit users asking about it didn’t expect or seem to want this content on their FYP.

But on the other hand, considering the population of East and South Asia, maybe the real anomaly is how low the representation is on the other platforms. Maybe the real answer is “This is what it looks like when diverse voices are being lifted up.” Below you can see the breakdown of how many posts we saw on each platform, in terms of ethnic diversity.

This is probably a question that is impossible to answer based on our data and methodology, but I think it’s important to point out that there is more than one way to approach data interpretation here, and that makes it worthy of more study.

Gender diversity

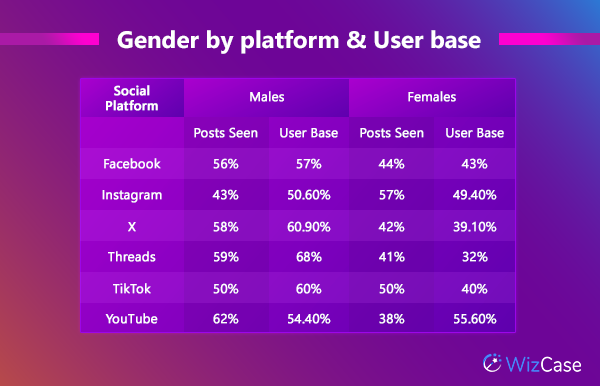

We found that TikTok had the most gender parity, with a 50/50 representation, and YouTube had the least, with 68% males and 32% females. Instagram was the only platform with more females than males (57% and 43%, respectively). What is interesting about this data is that, when compared to the platforms’ user bases, men are actually underrepresented and women are overrepresented on every platform except YouTube.
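One simple way to compare parity across platforms is the gap, in percentage points, between the male and female shares. The sketch below uses only the three sets of figures quoted in the text. The other platforms' numbers appear only in the chart, so they are left out. The function names are illustrative, and this is not necessarily how the study computed parity.

```python
# (male %, female %) as quoted in the text; other platforms are omitted
# because their exact figures are not stated in the article body.
GENDER_SHARES = {
    "TikTok": (50, 50),
    "YouTube": (68, 32),
    "Instagram": (43, 57),
}


def parity_gap(male, female):
    """Absolute difference in percentage points; 0 means perfect parity."""
    return abs(male - female)


def rank_by_parity(shares):
    """Return (platform, gap) pairs ordered from most to least balanced."""
    gaps = {name: parity_gap(m, f) for name, (m, f) in shares.items()}
    return sorted(gaps.items(), key=lambda item: item[1])


ranking = rank_by_parity(GENDER_SHARES)
for platform, gap in ranking:
    print(f"{platform}: {gap} percentage points")
```

An absolute gap treats a skew toward women the same as a skew toward men, which matches how "parity" is used in this section.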

These findings were largely in line with the different platforms’ content categories and perceived gender roles and interests. YouTube’s content categories, for example, were news, sports, and outdoor-type hobbies, all of which are typically male-dominated. Conversely, Instagram is often associated with health & wellness, fashion, and lifestyle content — which are traditionally considered feminine.

Harmful content

X has been gaining a reputation in some circles for its explicitly hateful content. So, on the one hand, I was unsurprised that much of the content we were served by X was objectively negative, violent, or hateful. What I did find surprising was that, aside from a couple of videos on Instagram (that were tame in comparison), no other platform showed us this level of harmful content.

Here’s a breakdown of what, exactly, we were shown:

Negative content on X

| Content | Quantity | Example |
| --- | --- | --- |
| Crime | 1 | CCTV footage that appears to depict 2 Black people robbing a store |
| Degradation of women | 2 | A man berating a woman for not going home with him after having bought her dinner |
| Depicts a death | 2 | Video of a suicide, image of a dead body from the Ukraine war |
| Police violence | 3 | Video of an officer-involved shooting |
| Portrays a severe injury | 2 | Teen beating up another teen at school |
| Sexual content | 1 | Girl offering explicit pictures |
| Sexual harassment | 1 | Video of a woman being touched by a man trying to take a picture with her |
| General violence (fights, etc.) | 10 | Cell phone video recording of a White man throwing a White woman off a pier into the water |
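To put the table in perspective, the quantities can be tallied with a short Python sketch. It assumes each post was counted in exactly one category, which the table does not confirm, and the variable names are illustrative only.

```python
# Quantities copied from the table of negative content on X.
# Assumption: each post appears in exactly one category.
NEGATIVE_CONTENT_ON_X = {
    "Crime": 1,
    "Degradation of women": 2,
    "Depicts a death": 2,
    "Police violence": 3,
    "Portrays a severe injury": 2,
    "Sexual content": 1,
    "Sexual harassment": 1,
    "General violence (fights, etc.)": 10,
}

total_negative = sum(NEGATIVE_CONTENT_ON_X.values())
largest_category = max(NEGATIVE_CONTENT_ON_X, key=NEGATIVE_CONTENT_ON_X.get)
largest_share = NEGATIVE_CONTENT_ON_X[largest_category] / total_negative

print(f"Total negative items: {total_negative}")
print(f"Largest category: {largest_category} ({largest_share:.0%} of negative items)")
```

If the one-category assumption holds, the total can be read against the 100 posts sampled on X.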

This research was done by one person in one day, with no input from the researcher (they did not search for anything, nor did they Like or comment on any post). This rapid devolution of the For You tab is similar to my own experience on the platform.

Much like YouTube, X’s content algorithm is extremely responsive to user input, at times requiring only the barest minimum of input. For example, in my own use of the platform, I don’t Like posts from people I don’t know and nearly never comment. Nonetheless, if I read the comments on a couple of posts, my For You tab becomes dominated by that topic — whether I want it to or not.

The ease of account setup, the ability to boost an account by paying, and the robust content algorithm that seems to only require minimal interaction add up to the near inevitability of seeing content users may find disturbing in some way.

Political bias

X and Threads only served content on one side of the political spectrum: X was right, and Threads was left. This feels pretty intuitive, as X has a certain reputation for conservative viewpoints, and Threads was a place that many liberal voices moved to after Twitter’s purchase.

TikTok showed us only one instance of political content, which makes sense because its algorithm amplifies what you search for and what you interact with. The fact that we didn’t search for anything and didn’t interact with anything that wasn’t organically served to us means that TikTok was merely showing us what’s popular in our location and then content similar to that.

At first glance, YouTube and Instagram are the most balanced platforms in terms of political representation — because there wasn’t any. However, I do want to stress that this is totally based on our starting points. Had we chosen content based on our interests, it’s likely that we would have encountered political content at some point.

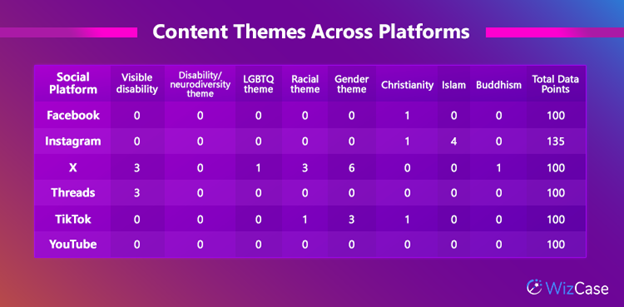

Content Themes and Topics

The problem with quantifying race, gender, disability, religion, and sexuality without asking the involved party is that you have to rely on incomplete information. There were some categories where we did not feel comfortable choosing a label for someone else, so we simply did not. Instead, we counted whether there was a post with that theme or topic. We also felt it was important to look at the themes of posts because content is a part of representation as well.

Overall, we saw very few themes in the categories that include non-visible states: religion, disability, and LGBTQ+. Instagram had the most depictions of religion, and the majority were about Islam. However, this is likely related to the relatively high representation of Asian and MENA content as well as highly distinctive religious dress.

Although we did see depictions of disabled people, we saw barely any discussion of disability. In one way, this is representation and that is positive. But because there are so many invisible disabilities, it was quite surprising that no platform had any content on disabilities or neurodiversity.

There was a single instance of LGBTQ+ themes, which was on X and was negative.

Methodology: What We Did

When considering this topic, we quickly realized that we needed to ensure our results were objective and impartial. In order to achieve this goal, we connected to a VPN server in the US and created new accounts on Facebook, Instagram, Threads, X (formerly Twitter), TikTok, and YouTube.

Because some social media apps use more than your IP address to determine your location (for example, your cell phone carrier and device identifiers), we used platforms’ websites on browsers in incognito mode. This ensured that our location would really be identified as the US.

We had some issues with the Meta group of apps (Facebook, Instagram, and Threads). No matter how our researcher tried to create a new account in a browser, they kept being flagged as a bot account.

In order to conduct the research, they had to sign up via the mobile app using a VPN and Hushed (an app that enabled them to create a temporary, US-based number). After successfully creating an account on Facebook, Instagram and Threads were easier to set up because we could use our Facebook account to link accounts.

The fact that we had to link our Facebook account with the Instagram and Threads accounts is less than ideal. However, you can’t create a Threads account without an Instagram account, and we couldn’t create an Instagram account without linking our Facebook account. Since we didn’t follow any accounts on any platform, nor did we like or comment on posts, influence from Facebook should have been minimal.

We also had issues with YouTube. It was easy enough to create an account, but when we allowed YouTube to auto-play videos, it showed us 10 videos in a row from the same account that were all thematically related (all music videos from VEVO accounts, for example).

Although this is notable, it’s not necessarily how people interact with YouTube and it’s also not an accurate representation of the types of content users are served. In order to be objective, what we did instead was click the top recommendation that was from a different account.
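The selection rule described above (take the top recommendation that comes from a different account) can be sketched as follows. The `Video` class, the function name, and the sample titles are hypothetical stand-ins for what a researcher sees on screen. This was a manual process in the study, and the sketch does not use any YouTube API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Video:
    title: str
    channel: str  # the account that published the video


def pick_next(current: Video, recommendations: list) -> Optional[Video]:
    """Return the highest-ranked recommendation from a different account.

    `recommendations` is assumed to be ordered as shown on the page,
    top recommendation first. Returns None if every recommendation
    comes from the same account as the current video.
    """
    for candidate in recommendations:
        if candidate.channel != current.channel:
            return candidate
    return None


# Hypothetical example data, for illustration only.
current = Video("Music video A", "ArtistVEVO")
sidebar = [
    Video("Music video B", "ArtistVEVO"),
    Video("Music video C", "ArtistVEVO"),
    Video("Game trailer", "SomeGameStudio"),
]
chosen = pick_next(current, sidebar)
print(chosen.title if chosen else "No recommendation from a different account")
```

Skipping same-account recommendations is what keeps a single channel's autoplay queue from dominating the sample.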

Using this methodology, we were able to measure what types of content are randomly shown — before content algorithms start to curate the feeds. We looked at demographics as well as content themes, focusing on race, gender, disability, sexual orientation, politics, and religion.

Conclusion

Looking at these platforms pre-algorithm can give us a starting point for a discussion. After going through this project and viewing social media platforms through a more objective lens, I can draw a few conclusions. For one, it appears that, in the platforms’ effort to be “neutral,” a large number of voices are being left behind.

If one considers the population as a whole, the posts we were presented are wildly biased. Are we really to believe that the majority of the population of the US is White, cis-gendered, heterosexual men with no disabilities? Are we also to believe that this group of men are non-religious and have no strong political opinions? Furthermore, are we to believe that the only content the entire US population wants to see is from this mysterious group? Call me a cynic, but I highly doubt any of this could possibly be the case.

This leads to the next conclusion, which is that social media is largely a reflection of ourselves. We see what we want to see, or alternatively, we see what the algorithm thinks we will engage with.

We can take my own social media accounts as an example here. My personal experiences on the social media platforms we researched are markedly different from the results of our research. I see tons of LGBTQ+ content, tons of content featuring people of color, discussions of disability, and so on. Yes, my “For You” page on X gets spoiled from time to time due to a random search or like, but it usually goes back to what I prefer it to be fairly quickly, as long as I go back to engaging with the other content.

So, content algorithms on these sites serve the topics they think you want to see. This is not to absolve any of these platforms of any responsibility. If you’re searching for fitness-related content and are served content from health grifters, there’s no excuse for that. But I seek out particular types of content from particular types of content creators, and that’s largely what I see.

This is all to say that, to me, the problem with representation is multifaceted; platforms need to do more to ensure they don’t support harmful content (looking at you, X) and that they take a different view on what “neutral” means. At the same time, users need to be mindful about what they are consuming. This means engaging with diverse accounts and seeking out opinions other than our own. Otherwise, we (myself included) end up in an echo chamber, and we lose valuable perspectives. Challenging our own narratives is more important than ever. In these divisive times, we can’t rely on an algorithm to help us out.

Data Sets

Threads

X

TikTok

YouTube
